We can take an example with the log volume of each host and build summary rows and columns using unions and joins, respectively. This can give us the opportunity to perform certain calculations like percentage of total, or more advanced statistical analysis on your unstructured data to include a summary row at the bottom of a table.

Columns:

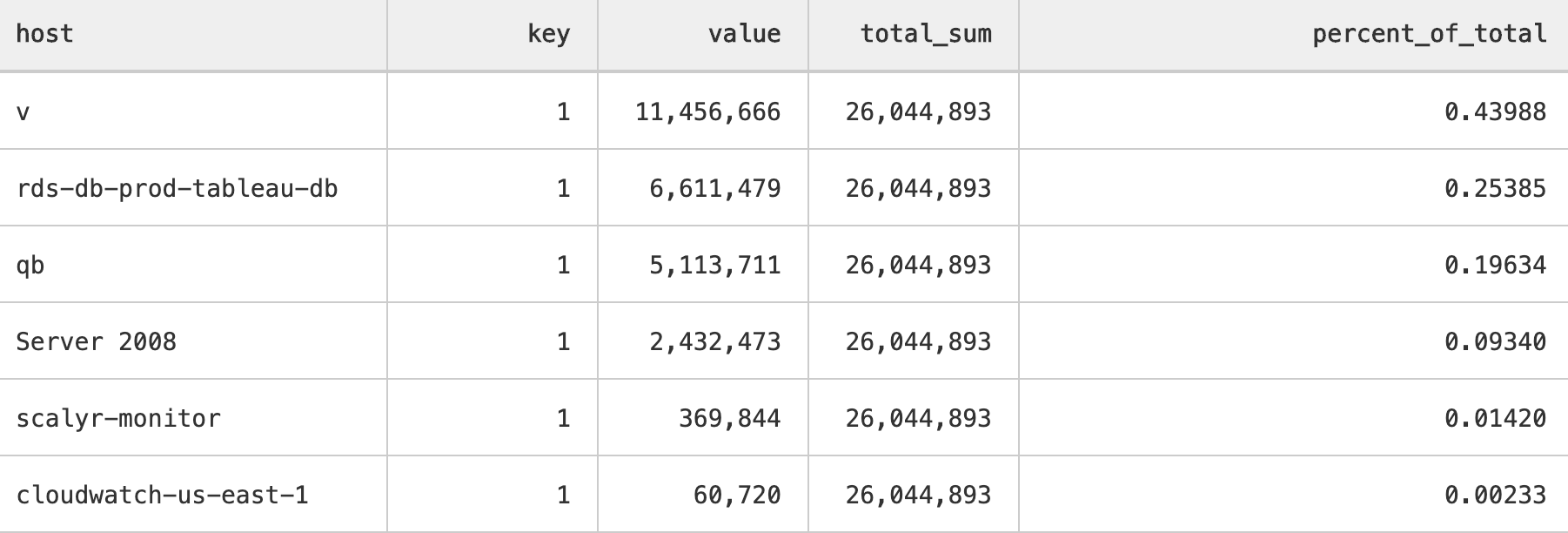

In this example, we will use one query to get log volume by host, and in the other, we will get the sum of all hosts’ volume and place it in a column next to each group.

|join

a = (

tag='logVolume' metric='logBytes'

|group value = sum(value) by host, key = 1

|sort -value

),

b = (

tag='logVolume' metric='logBytes'

|group total_sum = sum(value) by key = 1

)

on

key

Note: See the key = 1 that we are statically assigning. This ensures that row will retain the value.

Now we can perform computation of aggregate and non aggregate values|let percent_of_total = value/total_sum

You could also use the columns command so that the key and total_sum columns do not appear, if you’d like.

Rows:

In this example we will use one query to get log volume by host, and in the other, we will get the sum of all hosts’ volume and place it in a row appended to the bottom

|union

(

tag='logVolume' metric='logBytes'

|group value = sum(value) by host

|sort -value

),

(

tag='logVolume' metric='logBytes'

|group value = sum(value)

|columns value, host = "ALL HOSTS"

)

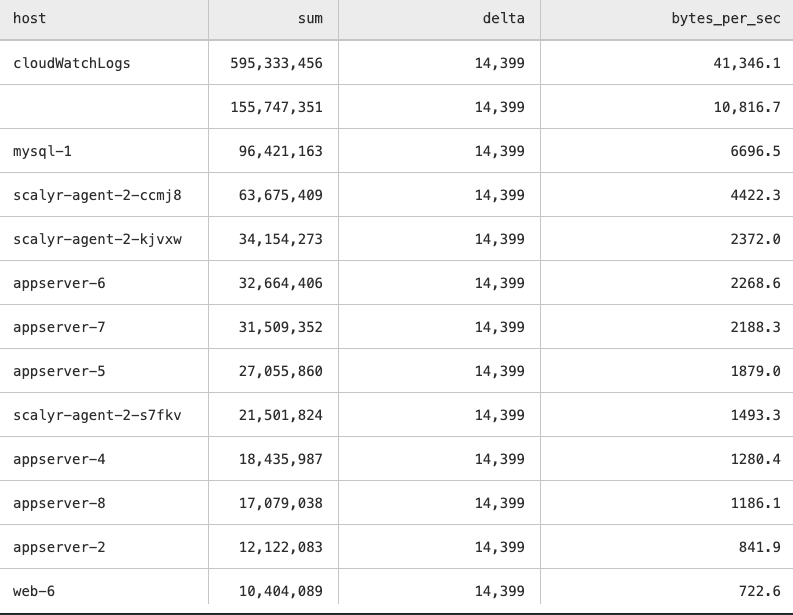

Practical ExampleWe can perform some pretty useful table calculations with this technique. An example of this is calculating a rate. We can calculate bytes per second from log data and log volume meta data.

Take the In the following example, the first search is looking for log volume meta data tag='logVolume' metric='logBytes' .The second, message=* is looking for all log messages. We get the sum from the former and the max and min timestamps from the latter.

We do a self join on key by defining key = “1”. We now calculate the [delta between the two timestamps](insert link here) and set it to delta and then subtract the sum from the delta which gives us the bytes/sec. This logic is with log data but has a multitude of applications.

| join

(tag='logVolume' metric='logBytes'

| group sum = sum(value) by host, key = "1"),

(message=*

|group mint = min(timestamp), maxt = max(timestamp) by key = "1")

on

key

| let delta = (maxt - mint)/1000000000

| columns host, sum, delta, bytes_per_sec = sum/delta| sort -bytes_per_se