The widespread adoption of AI introduces unique security considerations. Misconfigurations in AI infrastructure and unsecured APIs can create weaknesses that cybercriminals will exploit. In this blog post, we dive deep into how SentinelOne’s agentless AI Security Posture Management (AI-SPM) solution addresses these challenges by offering AI inventory automation, misconfiguration detection, and attack path analysis to help organizations secure their AI workloads effectively.

More and more organizations are deploying generative AI (GenAI) models on public clouds like AWS due to their on-demand scalability, specialized infrastructure like high-performance GPUs and TPUs, and AI management platforms like Amazon SageMaker, Amazon Bedrock, Azure OpenAI, and Google Vertex AI.

This trend is causing global investment in artificial intelligence (AI) to grow rapidly, with IDC’s Worldwide AI and Generative AI Spending Guide projecting spending on AI-enabled applications, infrastructure, and services to more than double by 2028, reading $632 billion at a compound annual growth rate (CAGR) of 29%. This surge will account for almost 40% of overall public cloud spending within the next three years.

Securing Against Evolving AI Threats

As our dependence on AI technology scales up, it becomes more attractive for cybercriminals to target. Threat actors are looking for new ways to exploit misconfigured AI infrastructure, taking advantage of security gaps to manipulate models or steal sensitive data. This evolving threat requires organizations to secure their AI systems from novel and existing risks proactively.

One of the most common AI-related security threats is data theft. For example, if an AI developer creates an Amazon Bedrock training job to train a machine learning model but fails to attach it to a Virtual Private Cloud (VPC), this misconfiguration could expose the job to the Internet. A case like this could allow adversaries to intercept or access sensitive training data, potentially compromising personally identifiable information (PII) or confidential business data. Additionally, insecure API endpoints for AI models can allow threat actors to interact directly with the models, potentially causing model misuse.

How SentinelOne Protects AI Workloads

SentinelOne’s AI-SPM solution, available as part of Cloud Native Security (CNS), helps address the evolving risks associated with GenAI. AI-SPM was designed from the ground up to safeguard your AI models and pipelines deployed on managed AI services such as Amazon SageMaker, Amazon Bedrock, Azure OpenAI, and Google Vertex AI. SentinelOne’s AI-SPM delivers three primary outcomes.

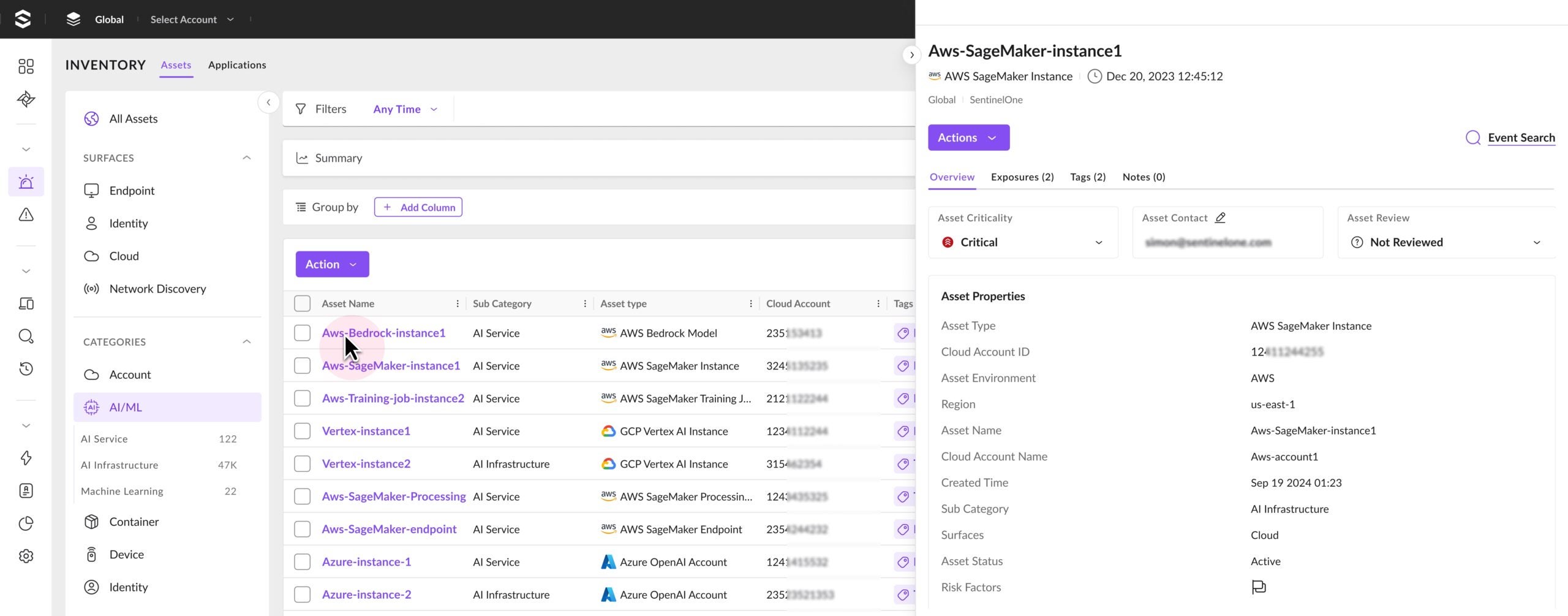

Automated Inventory of AI Infrastructure

AI-SPM discovers AI services such as machine learning (ML) models, training and processing jobs, and pipelines. For example, if your organization uses Amazon SageMaker to manage your AI/ML pipeline, you will gain end-to-end visibility into the SageMaker notebook instances, SageMaker endpoints, and deployed models in that pipeline.

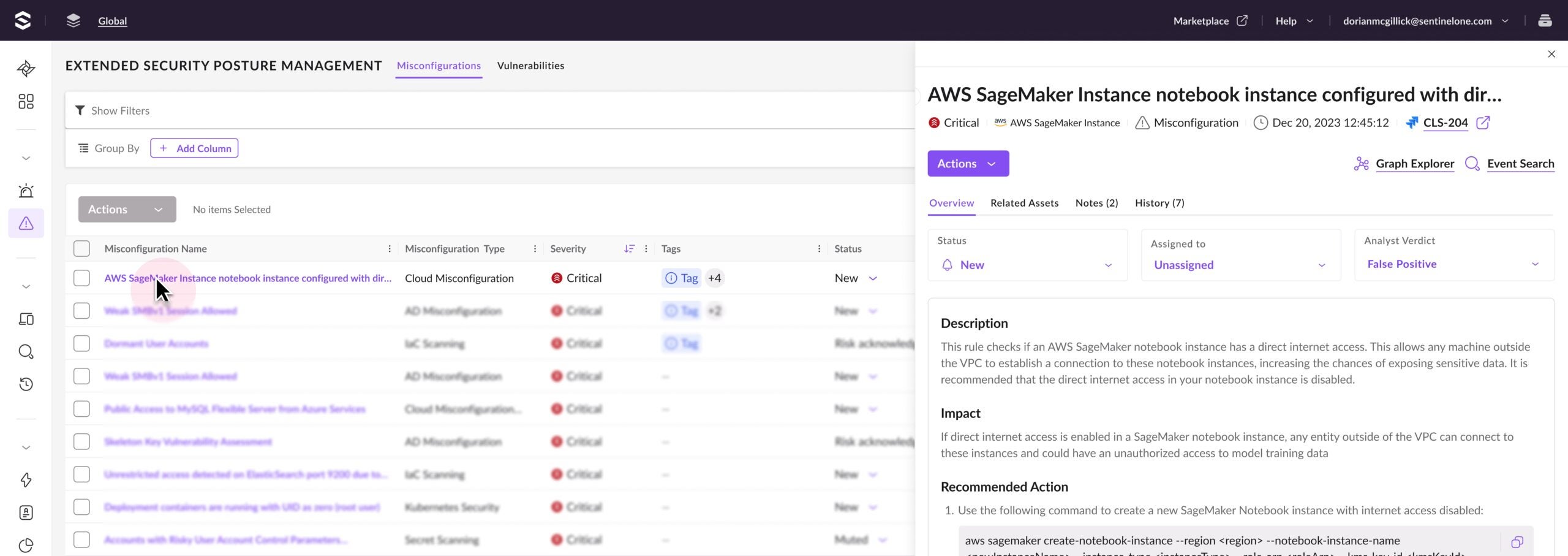

Detection of AI-Native Misconfigurations

AI-SPM’s built-in security rules provide insights into misconfigurations across AI services like AWS SageMaker, Bedrock, Azure OpenAI, and Google Vertex AI. For example, if an Amazon SageMaker notebook instance is configured with direct internet access, AI-SPM generates an exposure and recommends actions to address it. Additionally, support for frameworks like EU AI Act and NIST AI Risk Management Framework helps CNS clients ensure that AI workloads are compliant with AI security standards.

Actively Resolve Potential Issues with Attack Paths

By visualizing attack paths related to AI workloads, you can see exactly how an adversary could traverse your environment and potentially move laterally to gain access to resources.

Conclusion

As global investment in AI continues to climb, promising on-demand scalability and specialized AI infrastructures, organizations will focus on managing the unique security challenges that come with it. Misconfigured AI systems, such as exposed endpoints or improper access controls, are low-hanging fruit that threat actors look to exploit, potentially leading to model manipulation or data compromise. Proactive security measures like SentinelOne’s AI-SPM become key in protecting business-critical data and ensuring the integrity of AI workloads across quickly changing AI-based threats.

Keen to learn more about SentinelOne’s cloud security capabilities? Visit us at Booth #1632 at the upcoming AWS re:Invent conference and see SentinelOne’s AI-SPM in action. Enjoy hands-on demos with product experts, our Mortal vs. Machine challenge, big prize giveaways, and the chance to stock up on the latest SentinelOne swag.