ATTENTION: This solution has been deprecated

Please see our current GCP integration here: https://support.scalyr.com/hc/en-us/articles/4404098324115-Getting-Started-with-Pub-Sub-Monitor

Stackdriver aggregates metrics, logs, and events from Google Cloud infrastructure and other resources on the platform. These signals offer valuable insight into your applications and systems, and you can ingest them into Scalyr using Google Cloud’s Pub/Sub and Cloud Functions. The steps below show how to send GCP logs to Scalyr.

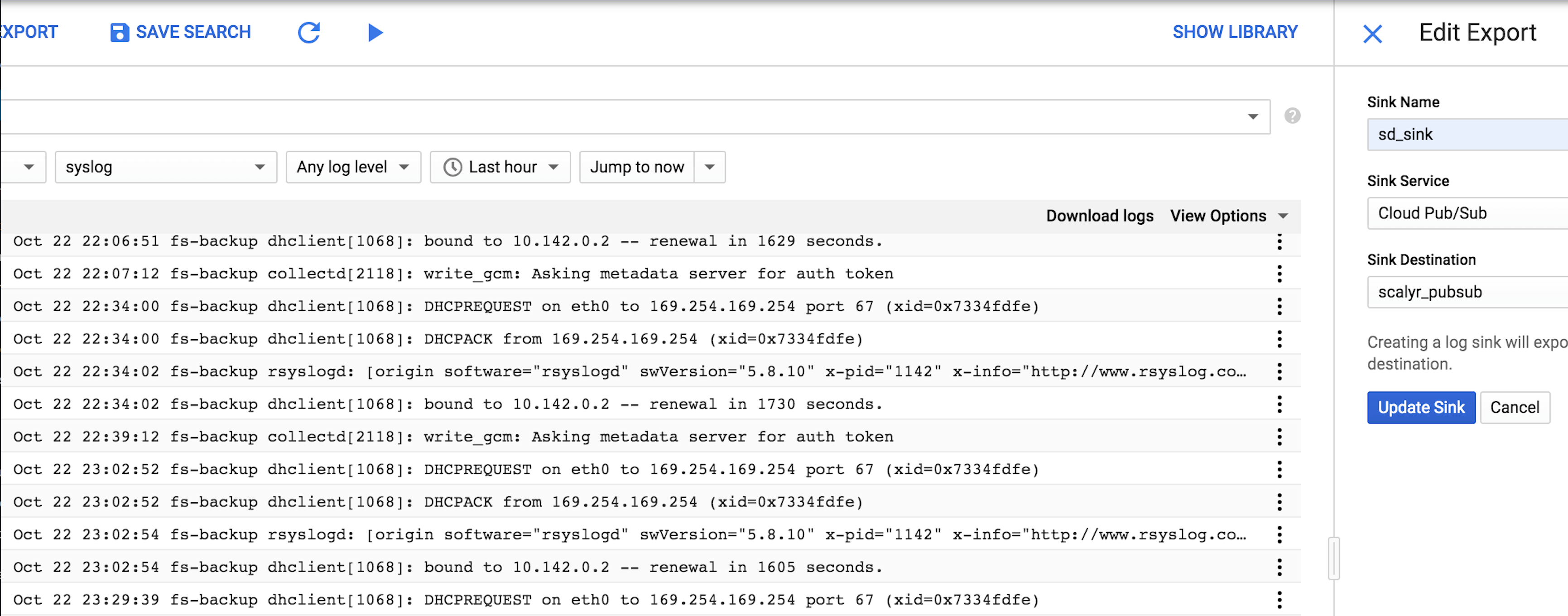

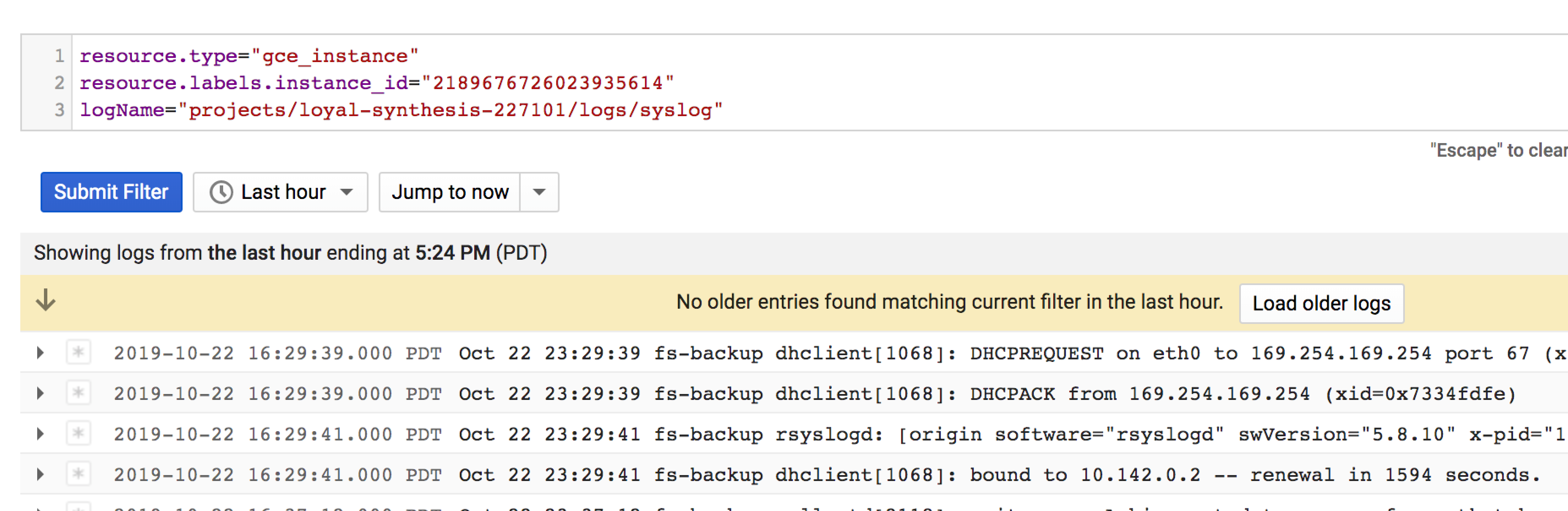

Step 1: Set up an export for Stackdriver logs

Select the types of logs (e.g. GKE Load Balance, Compute Engine, Cloud Functions, etc.) to export using the drop-down menu on the Stackdriver logs viewer page. The selected services are used as the filter for the exported logs.

The export job is called a “Sink”. Three parameters are required to create a Sink.

- Sink Name: <DEFINE YOUR OWN SINK NAME>

- Sink Service: Pub/Sub

- Sink Destination: <DEFINE YOUR OWN PUB/SUB TOPIC>

The Sink filter is based on the services selected on the Stackdriver logging page. The following example uses sd_sink to export Syslog from a GCE VM instance (fs-backup).

You can also change the Sink filter after the Sink has been created (select Exports on the left panel -> right-click sd_sink -> edit Sink).
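As a rough illustration of what the Sink ends up holding, the sketch below builds a filter string and a Pub/Sub destination string in Python. The project ID, instance ID, and topic name are hypothetical placeholders, and the filter shape (one log from one GCE instance) is an assumption about what the console generates for the example above, not a copy of it. Note that the `instance_id` label is the numeric instance ID, not the VM name.

```python
def build_sink_filter(project_id: str, instance_id: str, log_id: str = "syslog") -> str:
    """Return a Logging filter matching one log from one GCE VM instance."""
    return (
        'resource.type="gce_instance" '
        f'AND resource.labels.instance_id="{instance_id}" '
        f'AND logName="projects/{project_id}/logs/{log_id}"'
    )


def build_pubsub_destination(project_id: str, topic: str) -> str:
    """Return the Sink destination string for a Pub/Sub topic."""
    return f"pubsub.googleapis.com/projects/{project_id}/topics/{topic}"


# Hypothetical values, for illustration only.
sink_filter = build_sink_filter("my-project", "1234567890123456789")
sink_destination = build_pubsub_destination("my-project", "scalyr-logs")
print(sink_filter)
print(sink_destination)
```

Comparing these strings with what the Sink editor shows is a quick way to confirm that the export covers only the logs you intend to send.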

Now, we are ready to set up the Cloud Functions to consume messages from the Pub/Sub topic.

Step 2: Link the Pub/Sub topic to a Cloud Function

Go to Cloud Functions and fill in the following parameters.

- Memory allocated: 512MB

- Trigger: Cloud Pub/Sub

- Topic: the Pub/Sub topic created in step 1

- Source code: inline editor

- Runtime: Python 3.7

- Function to execute: scalyr_pubsub

Copy and paste the following content into requirements.txt and main.py. Note: replace api_token, serverHost, logfile, and parser with your own settings.

- requirements.txt

google-cloud-pubsub==0.45.0

requests==2.22.0

- main.py

import base64
import json

import requests


def scalyr_pubsub(event, context):
    """Background Cloud Function to be triggered by Pub/Sub.

    Args:
        event (dict): The dictionary with data specific to this type of event.
            The `data` field contains the PubsubMessage message. The
            `attributes` field will contain custom attributes if there are any.
        context (google.cloud.functions.Context): The Cloud Functions event
            metadata. The `event_id` field contains the Pub/Sub message ID.
            The `timestamp` field contains the publish time.
    """
    print("This Function was triggered by messageId {} published at {}".format(
        context.event_id, context.timestamp))

    if 'data' in event:
        # Pub/Sub delivers the exported log entry as base64-encoded JSON.
        event = base64.b64decode(event['data']).decode('utf-8')
        e = json.loads(event)

        # API parameters
        serverHost = 'your server name'
        logfile = 'your log file name'
        api_token = 'XXXXX'
        parser = 'your parser name'

        # scalyr host
        scalyr_server = 'https://www.scalyr.com'

        # Ingest logs to Scalyr
        ### DO NOT USE THE FOLLOWING UPLOAD LOGS API IN PRODUCTION. THE CODE SNIPPET IS MERELY A DEMO FOR INGESTING SMALL AMOUNT OF LOGS TO SCALYR ###
        headers = {'Content-Type': 'text/plain'}
        # Passing the query parameters via `params` lets requests URL-encode them.
        r = requests.post(
            '{}/api/uploadLogs'.format(scalyr_server),
            params={'token': api_token, 'host': serverHost,
                    'logfile': logfile, 'parser': parser},
            data=e['textPayload'].encode('utf-8'),
            headers=headers)
        if r.status_code != 200:
            print('Status:{}, Raw event:{}'.format(r.status_code, event))
    else:
        print("unknown format")
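The four settings in main.py (api_token, serverHost, logfile, parser) are hard-coded for brevity. One possible alternative, sketched below, is to read them from environment variables configured on the Cloud Function so the token stays out of the source. The variable names and default values here are hypothetical and not part of the original function. The dictionary keys mirror the query parameter names used in the uploadLogs request above.

```python
import os


def load_settings(env=os.environ):
    """Read upload settings from environment variables (names are hypothetical)."""
    token = env.get("SCALYR_API_TOKEN")
    if not token:
        raise RuntimeError("SCALYR_API_TOKEN is not set")
    return {
        "token": token,
        # The defaults below are placeholders; pick values that match your setup.
        "host": env.get("SCALYR_SERVER_HOST", "gcp"),
        "logfile": env.get("SCALYR_LOGFILE", "stackdriver"),
        "parser": env.get("SCALYR_PARSER", "json"),
    }


# Example with an explicit mapping instead of the real environment.
settings = load_settings({"SCALYR_API_TOKEN": "XXXXX", "SCALYR_SERVER_HOST": "fs-backup"})
print(settings["host"], settings["logfile"])
```

Failing fast when the token is missing makes a misconfigured deployment show up in the function logs on the first invocation rather than as a rejected upload.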

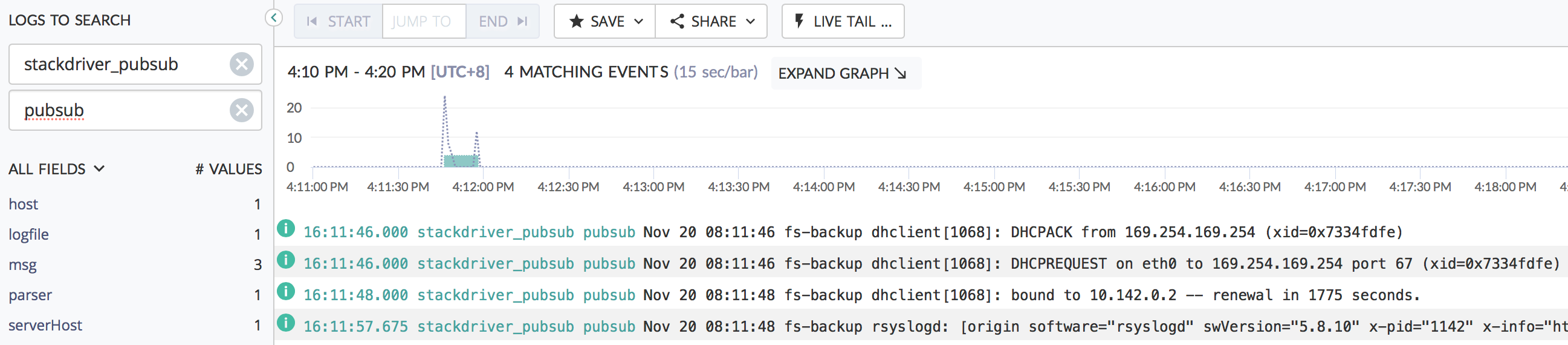

Please refer to our previous blog post for more information on Cloud Functions. Finally, go to scalyr.com to verify that your GCP logs were ingested successfully.
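Before deploying, it can help to exercise the decoding step locally. The sketch below builds a fake Pub/Sub event the way the function receives it (a base64-encoded JSON log entry in the `data` field) and decodes it again. The helper names and the sample entry are hypothetical. The fallback to `jsonPayload` is an addition for illustration, since the function above reads only `textPayload`.

```python
import base64
import json
from types import SimpleNamespace


def make_pubsub_event(log_entry: dict) -> dict:
    """Wrap a log entry the way a Pub/Sub background event carries it."""
    raw = json.dumps(log_entry).encode("utf-8")
    return {"data": base64.b64encode(raw).decode("ascii")}


def decode_log_entry(event: dict) -> dict:
    """Reverse of make_pubsub_event: base64-decode, then parse the JSON."""
    return json.loads(base64.b64decode(event["data"]).decode("utf-8"))


def payload_text(entry: dict) -> str:
    """Return the text to upload, falling back to serialized JSON."""
    if "textPayload" in entry:
        return entry["textPayload"]
    if "jsonPayload" in entry:
        return json.dumps(entry["jsonPayload"])
    return json.dumps(entry)


# Hypothetical sample entry and context, for illustration only.
sample_entry = {"textPayload": "Started backup job", "resource": {"type": "gce_instance"}}
event = make_pubsub_event(sample_entry)
context = SimpleNamespace(event_id="1", timestamp="2020-01-01T00:00:00Z")

print(payload_text(decode_log_entry(event)))
```

The same `event` and `context` objects could be passed to `scalyr_pubsub(event, context)` in a local session, keeping in mind that doing so sends a real upload request with whatever token is configured.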

These instructions are merely a starting point for getting your GCP logs into Scalyr. More performant and robust integrations are on our roadmap. Feel free to reach out if you are interested in them. We’d love to hear your feedback.